In the journey toward advancing healthcare research, trust in AI tools is essential. Truveta is committed to not only delivering powerful AI-driven data insights but also ensuring transparency, quality, and speed for researchers. A significant part of this commitment is Tru, our newly announced AI research assistant in Truveta Studio. Tru enables researchers to accelerate their research using simple, natural language questions. Here’s an in-depth look at Tru and how it’s designed to elevate research productivity and trust in AI.

What is Tru?

Tru is a research assistant in Truveta Studio powered by generative AI and trained on the Truveta Language Model (TLM) and Truveta Data and knowledge. It enables researchers to accelerate their research in Truveta Studio using simple, natural language questions.

Using Tru, researchers can quickly identify code sets and iteratively build precise population definitions, enabling them to develop, refine, and advance scientifically rigorous research faster. Tru can also help researchers develop hypotheses by surfacing trends through iterative prompts and data visualizations. Tru also provides transparency into the data sources and underlying code sets behind its responses.

Context and memory

Tru has conversational memory and understands the context of the previous conversation, so the researcher can interact with it in a natural way. Moreover, it keeps a record of all previous conversations it has had with a researcher (including generated results and visualizations), and the researcher can pick up and continue any previous conversation. We are also working on making Tru aware of the user’s activity in the rest of Truveta Studio, which will further reduce the burden on the user to ask explicit questions with detailed information.

How is Tru architected?

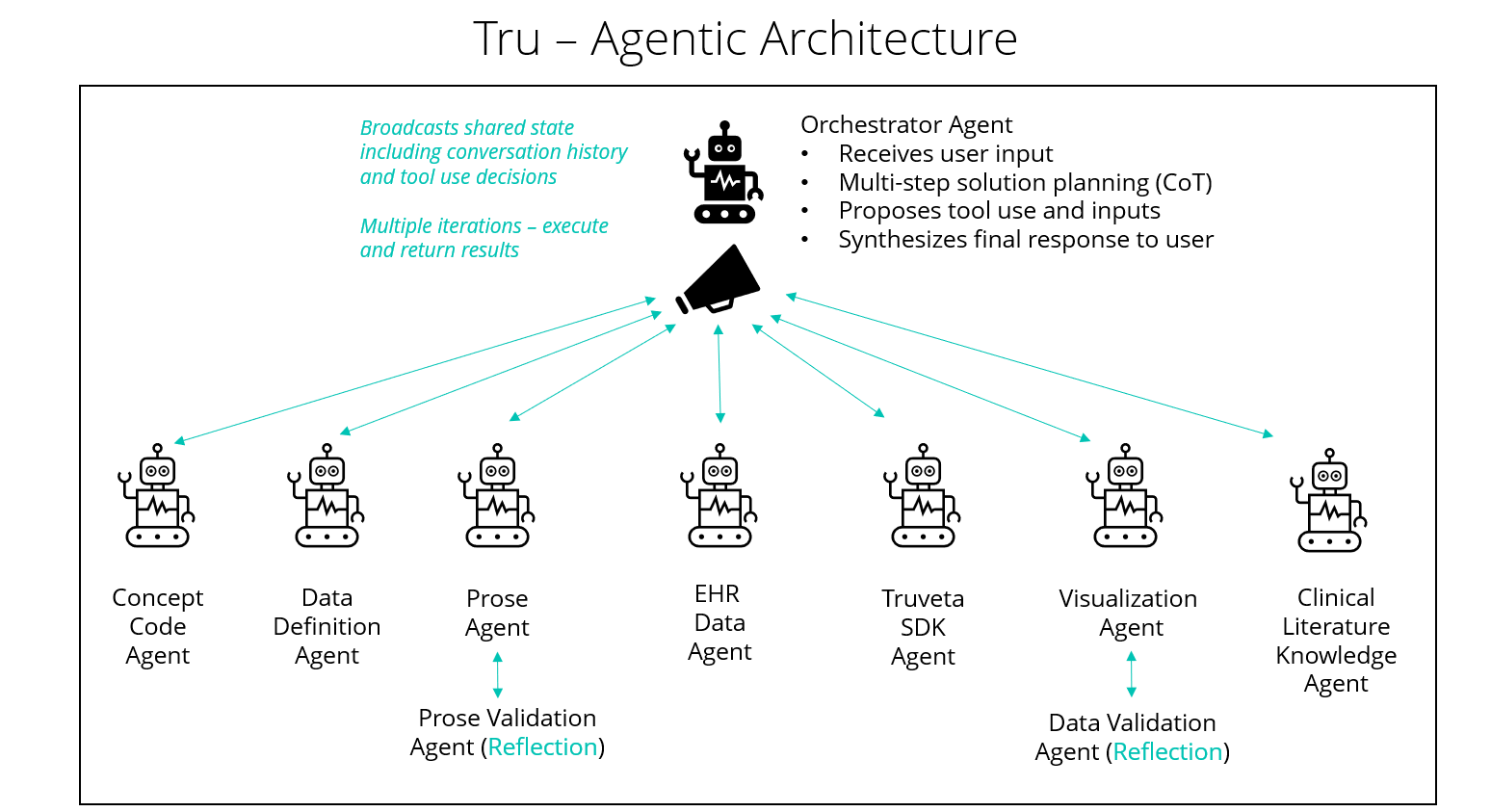

Tru is built on an agentic framework. It utilizes a set of specialized agents, each proficient in a specific task such as creating clinical code sets for defining phenotypes, writing Prose queries for building populations, retrieving and formatting EHR data, visualizing insights, and accessing knowledge from clinical literature and guidelines. (Prose is Truveta’s language for building populations based on clinical inclusion and exclusion criteria.) Each agent uses an LLM. The user interface is provided through a specialized agent called an orchestrator that converses with the researcher in natural language, performs chain-of-thought (CoT) multi-step planning, provides tool-use suggestions, accesses other agents as appropriate, and synthesizes responses for the user. The primary reason for using an agentic framework is that it gives us the ability to reduce hallucinations and improve quality in specific areas as required by utilizing trusted sources of knowledge and tuning each agent for the task that it is built for.
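To make the orchestrator pattern concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not Tru's implementation: the `Orchestrator` class, the agent names, and the keyword-based `plan` method are all hypothetical, and the keyword rules stand in for the LLM-driven multi-step planning described above.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical agent signature: takes the working text, returns enriched text.
Agent = Callable[[str], str]


@dataclass
class Orchestrator:
    agents: Dict[str, Agent]
    trace: List[str] = field(default_factory=list)  # which agents were consulted

    def plan(self, question: str) -> List[str]:
        """Stand-in for LLM planning: choose agents with simple keyword rules."""
        steps = ["code_sets", "prose"]
        if "trend" in question.lower() or "plot" in question.lower():
            steps.append("visualize")
        return steps

    def answer(self, question: str) -> str:
        """Run each planned agent in order and pass its output to the next."""
        context = question
        for name in self.plan(question):
            self.trace.append(name)
            context = self.agents[name](context)
        return context


# Toy agents that only append placeholders; real agents would each call an LLM.
agents = {
    "code_sets": lambda text: text + " | codes: <placeholder code set>",
    "prose": lambda text: text + " | query: <placeholder Prose query>",
    "visualize": lambda text: text + " | chart: <placeholder chart>",
}

orchestrator = Orchestrator(agents)
result = orchestrator.answer("Show the trend of type 2 diabetes diagnoses")
print(orchestrator.trace)
print(result)
```

Keeping each agent behind a small, uniform interface is what allows one agent to be tuned or replaced without touching the others.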

How does Tru optimize and adapt agents?

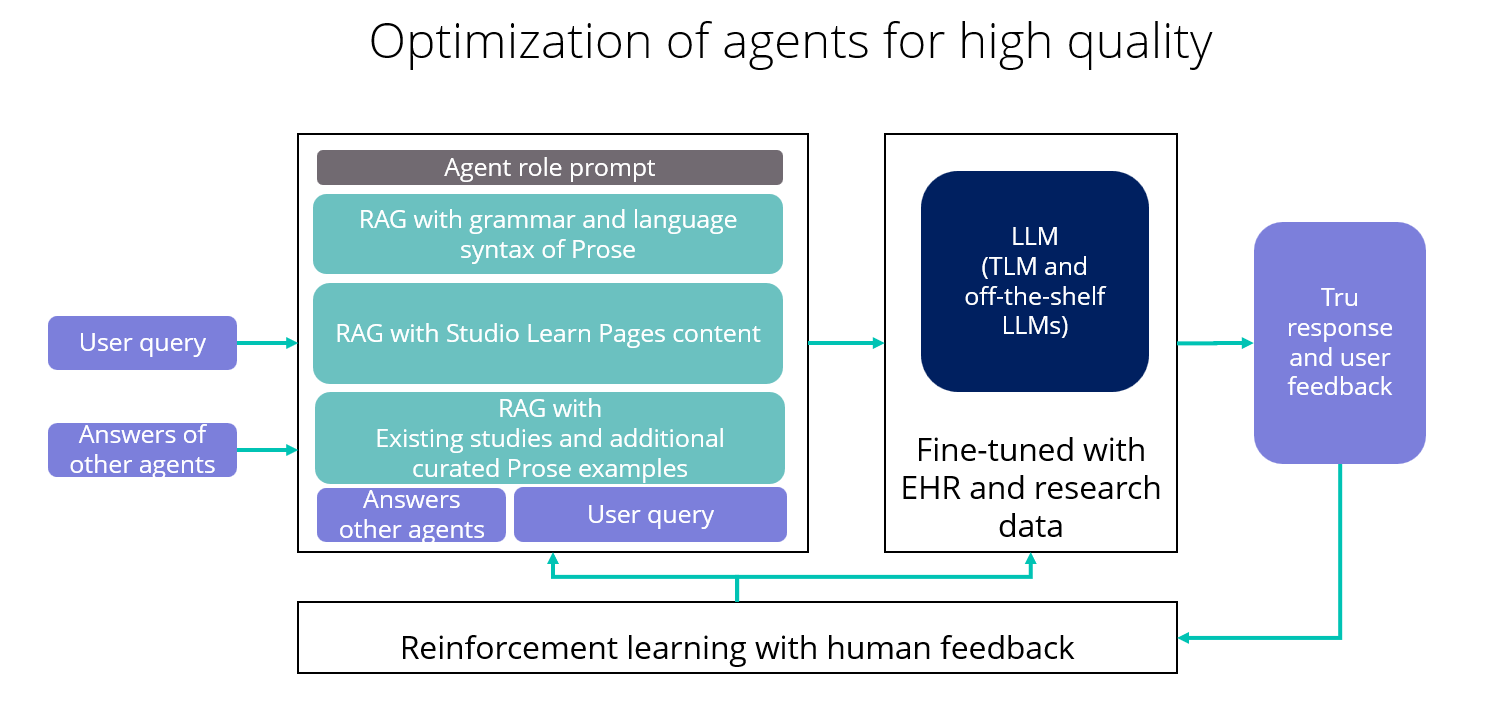

Tru has built-in mechanisms for enhancing LLM reasoning and generalization in each agent. These mechanisms are based on three complementary approaches:

- Retrieval augmented generation (RAG) aids LLM reasoning with precise, relevant contextual information derived from authoritative sources.

- Fine-tuning of the LLM improves relevance of responses to user queries by learning from authoritative input-output examples.

- Reinforcement learning from human feedback (RLHF) enables adaptation and self-correction in a continuous learning loop. It also enables personalization of the responses to specific user needs.

For example, let’s see how the Prose agent implements these techniques (see the figure above). The aim of the Prose agent is to convert a natural language description of a phenotype into an executable and clinically correct Prose query for that population. As a generic step, the agent first includes the technical knowledge of Prose grammar and syntax in the prompt. Then the agent pulls contextual Prose code from existing studies and curated examples in Truveta Library that are relevant to the user query. It also brings in information from the help documentation that is relevant to the query. It can rely on other agents, such as the Code Concept Agent, to get appropriate code sets for clinical concepts found in the user query. With this rich context in place, the agent then poses the user query to the LLM. The LLM itself is also fine-tuned with input-output examples of user queries and corresponding curated Prose code. Thus, it has a good chance of generating the correct code for the required population. Moreover, it can provide detailed reasoning and citations of the knowledge sources it has used in the process, which is critical for generating trust.
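The context-assembly step can be sketched in a few lines of Python. This is a simplified illustration, not the Prose agent's actual code: the function names, the section headings, and the word-overlap ranking are assumptions (a production system would more likely use embedding-based retrieval), and all example texts are placeholders rather than real Prose syntax.

```python
from typing import List


def overlap_score(query: str, text: str) -> int:
    """Toy relevance score: number of distinct words shared with the query."""
    return len(set(query.lower().split()) & set(text.lower().split()))


def retrieve(query: str, corpus: List[str], k: int) -> List[str]:
    """Return the k corpus entries that share the most words with the query."""
    ranked = sorted(corpus, key=lambda doc: overlap_score(query, doc), reverse=True)
    return ranked[:k]


def build_prompt(query: str, grammar_notes: str, examples: List[str],
                 help_docs: List[str], code_sets: List[str], k: int = 1) -> str:
    """Assemble the prompt: grammar, retrieved context, code sets, then the query."""
    sections = [
        "## Grammar and syntax\n" + grammar_notes,
        "## Relevant examples\n" + "\n".join(retrieve(query, examples, k)),
        "## Relevant documentation\n" + "\n".join(retrieve(query, help_docs, k)),
        "## Code sets\n" + "\n".join(code_sets),
        "## User request\n" + query,
    ]
    return "\n\n".join(sections)


# Placeholder inputs; none of these strings are real Prose code or documentation.
examples = [
    "example: adults with hypertension diagnosis",
    "example: patients with asthma and inhaler prescription",
]
help_docs = [
    "doc: how to filter patients by diagnosis date",
    "doc: how to export a chart",
]
prompt = build_prompt(
    query="patients with asthma",
    grammar_notes="<placeholder grammar summary>",
    examples=examples,
    help_docs=help_docs,
    code_sets=["asthma: <placeholder codes from the code set agent>"],
)
print(prompt)
```

Placing the user request last keeps the retrieved context ahead of the instruction the LLM must act on, and the labeled sections make it straightforward to cite which source contributed to the answer.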

Mistakes do happen sometimes, and the user can provide feedback on the output, either as a simple “thumbs up/down” or as detailed textual feedback. Both are processed by the RLHF framework and used to tune the RAG hyperparameters and policies as well as the LLM itself. A similar pattern holds for all the agents in the agentic network.
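As a rough illustration of how thumbs up/down signals could inform a retrieval setting, the Python sketch below picks the retrieval depth with the best approval rate. It is a deliberately simple stand-in and not RLHF itself: the `best_top_k` function, the `top_k` parameter, and the `min_votes` cutoff are hypothetical, and the feedback values are made up for the example.

```python
from collections import defaultdict
from typing import List, Optional, Tuple


def best_top_k(feedback: List[Tuple[int, bool]], min_votes: int = 2) -> Optional[int]:
    """Pick the retrieval depth with the highest thumbs-up rate.

    feedback holds (top_k used for the answer, thumbs_up) pairs. Settings with
    fewer than min_votes ratings are ignored so one vote cannot decide.
    """
    totals = defaultdict(int)
    ups = defaultdict(int)
    for top_k, thumbs_up in feedback:
        totals[top_k] += 1
        ups[top_k] += int(thumbs_up)
    eligible = {k: ups[k] / totals[k] for k in totals if totals[k] >= min_votes}
    if not eligible:
        return None
    return max(eligible, key=eligible.get)


# Made-up ratings: top_k=3 was liked half the time, top_k=5 every time,
# and top_k=8 has too few votes to count.
feedback = [(3, True), (3, False), (5, True), (5, True), (8, True)]
chosen = best_top_k(feedback)
print(chosen)
```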

Learnings from real-world usage

For the last few months, researchers from Truveta Research as well as selected customers have been using Tru on a trial basis. The learnings from this real-world usage are valuable to help us rapidly improve the quality of the assistive technology. We have made several improvements based on user feedback, such as:

- Agent visibility: Give a detailed in-line view of which agents are being consulted as part of the workflow being executed to answer a user query.

- Faster turnaround and streaming results: Reduce turnaround time by using performant LLMs, and stream partial results as they become available during workflow execution, so the user has a more enjoyable experience. They can also terminate the workflow and reframe the question if needed.

- Knowledge source transparency: Provide deep citations and hyperlinks to knowledge sources used by various agents.

- Intermediate results and explanations: Provide access to intermediate explanatory information such as code sets and Prose/Python/SQL code even when the user’s question does not explicitly ask for it.
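Two of the improvements above, agent visibility and streaming with early termination, fit naturally into a generator. The Python sketch below is an assumption-laden illustration rather than Tru's code: `run_workflow`, the step names, and the use of `threading.Event` as the cancel signal are all hypothetical choices.

```python
import threading
from typing import Callable, Iterator, List, Tuple

# Hypothetical step: an agent name plus a function that produces its output.
Step = Tuple[str, Callable[[], str]]


def run_workflow(steps: List[Step], cancel: threading.Event) -> Iterator[str]:
    """Yield each agent's partial result as soon as it is ready.

    The agent name is included in every chunk so the user can see which agent
    is being consulted. The cancel event is checked before each step.
    """
    for agent_name, run in steps:
        if cancel.is_set():
            yield "[cancelled before " + agent_name + "]"
            return
        yield "[" + agent_name + "] " + run()


cancel = threading.Event()
steps = [
    ("code_sets", lambda: "<placeholder code set>"),
    ("prose", lambda: "<placeholder Prose query>"),
    ("visualize", lambda: "<placeholder chart>"),
]

received = []
for chunk in run_workflow(steps, cancel):
    received.append(chunk)
    print(chunk)
    if chunk.startswith("[prose]"):
        cancel.set()  # the user stops the workflow to reframe the question
```

Because the generator checks the event only between steps, a step that is already running completes before the workflow stops. Finer-grained cancellation would need cooperation from each agent.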

Quality measurement

Consistently measuring the quality of an assistive technology is essential for rapid improvement. However, it is a challenging problem because in many cases there is no single correct answer to a question. Sometimes, a partially correct answer can still be valuable in unblocking a user. The universe of possible questions is essentially infinite, and it is not feasible to preemptively measure every scenario.

With the aid of our in-house research team, we have developed a benchmark set of high-value tasks along with the ground truth of the correct answers. We use expert human evaluation to assess the quality of the answers and explanations on a scale of 0-10, and we measure the ensemble statistics of the answers and compare them with expected thresholds. We conduct these human evaluations for each major iteration of the system, assessing aspects such as hallucination/accuracy, explainability, and reproducibility. We approve updates to the production system only if the quality bar is achieved. To meet the quality bar, we apply controls that limit hallucination and reduce bias and improper answers, such as temperature and top-p sampling settings, content moderation, and system messages. The system is encouraged to refuse to provide an answer to questions that are outside the scope of allowed topics.
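The release gate described above can be sketched as a small Python check. This is only an illustration: the use of the mean as the ensemble statistic, the function name `passes_quality_bar`, and all ratings and threshold values are assumptions, not the actual benchmark or quality bar.

```python
from statistics import mean
from typing import Dict, List


def passes_quality_bar(scores: Dict[str, List[int]],
                       thresholds: Dict[str, float]) -> bool:
    """Approve a release only if every aspect's mean rating meets its threshold.

    scores maps an aspect (for example "accuracy") to expert ratings on the
    0-10 scale. An aspect with no ratings fails the gate.
    """
    for aspect, minimum in thresholds.items():
        ratings = scores.get(aspect, [])
        if not ratings or mean(ratings) < minimum:
            return False
    return True


# Made-up ratings for one candidate release.
scores = {
    "accuracy": [9, 8, 10],
    "explainability": [7, 8, 9],
    "reproducibility": [6, 7, 8],
}
strict = {"accuracy": 8.5, "explainability": 7.5, "reproducibility": 7.5}
relaxed = {"accuracy": 8.5, "explainability": 7.5, "reproducibility": 7.0}

print(passes_quality_bar(scores, strict))
print(passes_quality_bar(scores, relaxed))
```

Gating on every aspect separately, rather than on one overall average, prevents a strong accuracy score from masking weak reproducibility.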

Tru is an exciting new addition to Truveta Studio, and we hope to learn and evolve the assistive technology to support our customers’ needs and democratize access to real-world healthcare insights for all.