In the final article in this series, I will share some thoughts on meeting the following challenge:

How can we rapidly support new AI scenarios and adjacencies through reuse and transfer learning?

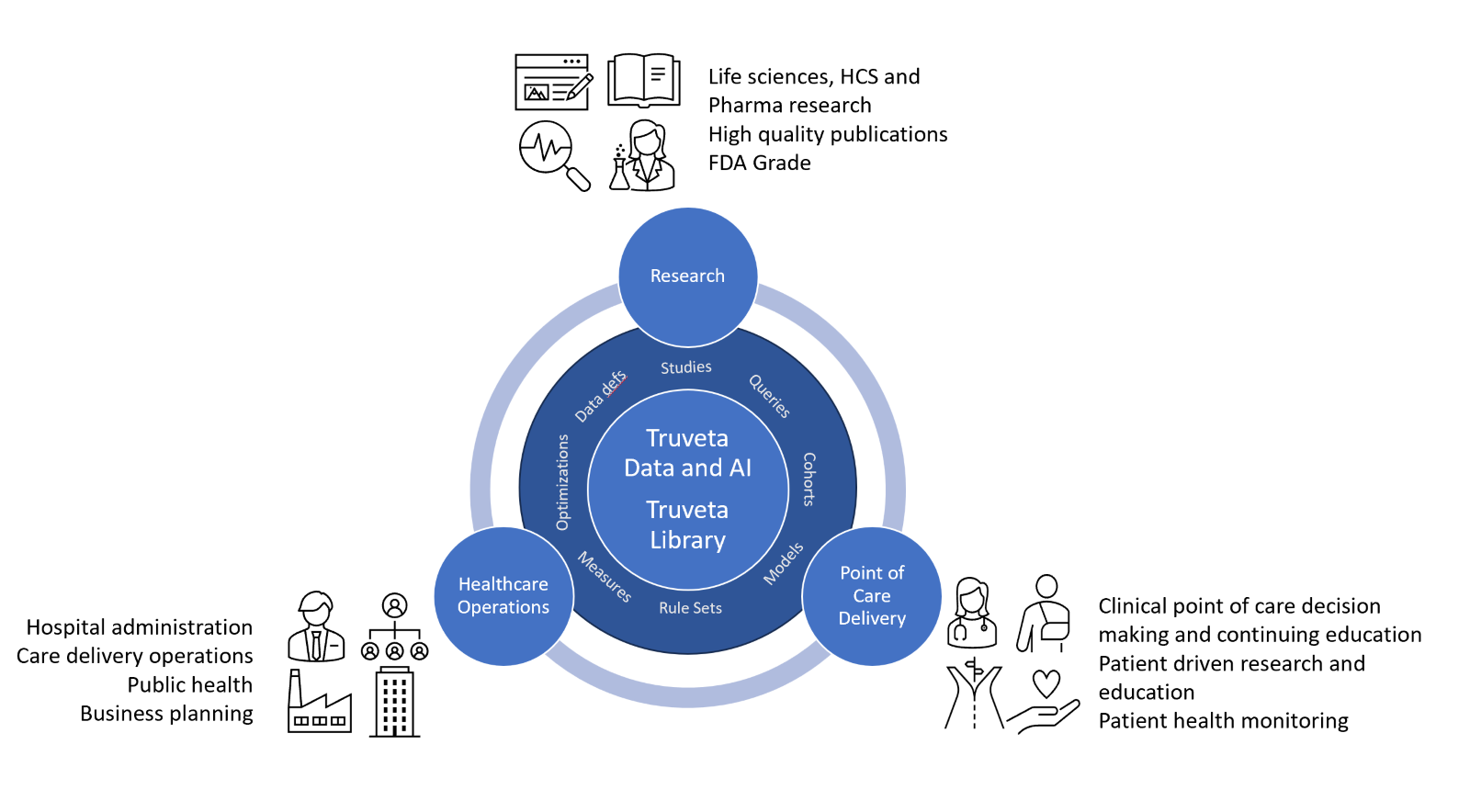

Truveta has diverse, multimodal, and up-to-date data on clinical healthcare in the US, sourced directly from electronic health records at hospitals. We use state-of-the-art AI models and systems for data transformation, extraction, normalization, and deidentification. Additionally, our platform produces auditable run-time evidence for data quality and correctness.

Truveta Data can be used for a broad swath of research areas, including safety and effectiveness, health economics outcomes research, clinical trials, AI model training, and market access. We also want to serve adjacent application areas, such as improving the quality of clinical decision-making at the point of care and optimizing healthcare operations, as shown in the figure above. Therefore, we are building a modular platform that scales and supports all these adjacencies. I would like to provide you with an overview of how we are purposefully achieving that through our AI architecture.

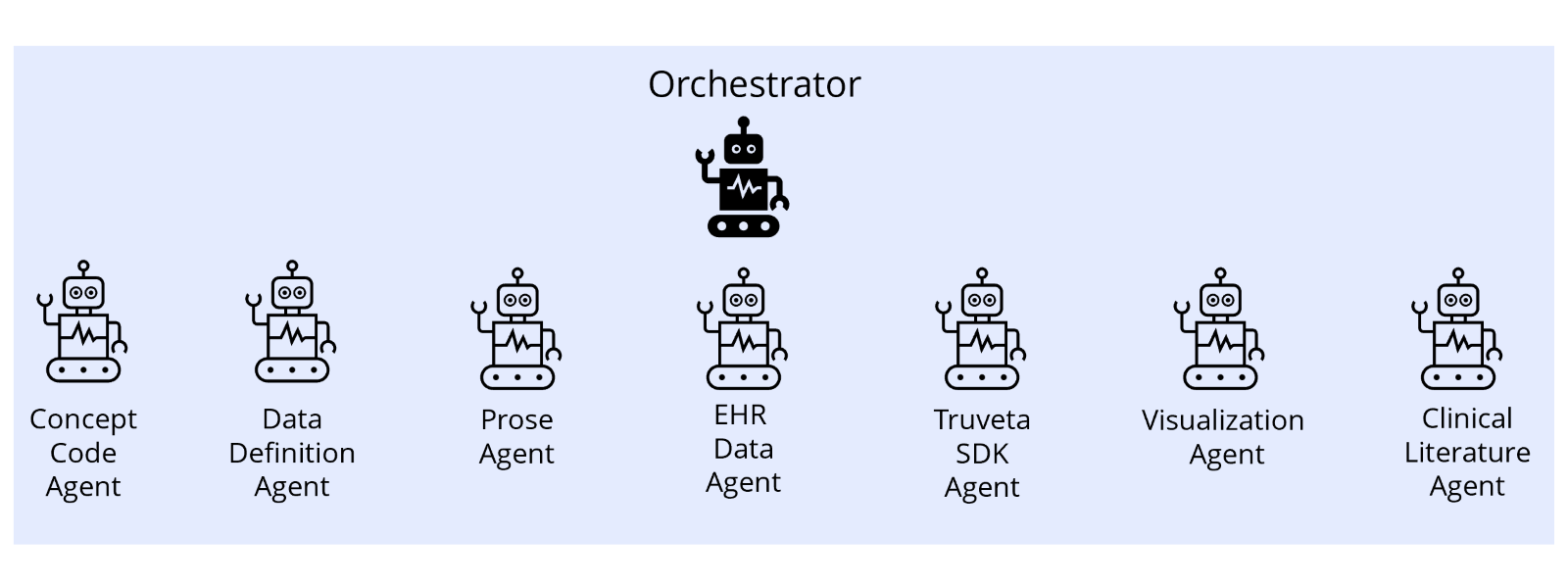

Intelligence network of AI agents

As we developed our AI platform, we converged on an architecture that scales and supports a variety of applications. It takes the form of an intelligence network of AI agents that can collaboratively address a diverse set of knowledge tasks. This network can be evolved and updated for changing needs. It also has intrinsic properties of plasticity due to transfer learning. It is not a coincidence that this agentic framework has similarities to the architecture of the human brain.

Inspiration from the human brain

As has been known for some time, deep learning is an important feature of the human (and animal) brain. However, what is equally interesting is that the brain is not a monolithic knowledge-processing engine. It is a complex organ with specialized regions dedicated to various cognitive functions.

Evolutionary neurobiologists hypothesize that evolutionary pressures favored such a brain architecture because it is optimal for fast learning and information processing under sparse supervision, while providing energy efficiency and adaptability to a changing environment. The brain also has another remarkable property, namely neural plasticity: the specialized regions can take on additional responsibilities when required.

While its specific regions have specialized roles, the brain nevertheless operates as an integrated network. Different areas communicate and collaborate to achieve complex cognitive tasks. The prefrontal cortex in particular plays a central role in orchestrating thoughts, emotions, and behaviors which allows us to plan, reason, and make decisions.

Designing an AI intelligence network of agents

Nature provides ample evidence and inspiration for a deep and specialized network approach to intelligence. It may well be the case that one day ML researchers will come up with a new generation of evolutionary training methods that evolve such a network in a self-organizing way. But we are not quite there yet. As of now, in state-of-the-art AI applications, we need to proactively engineer such networks.

As we engineer the network we want to keep two important principles top of mind:

Autonomy: We build specialized deep learning agents for specific tasks rather than one monolithic deep network. This naturally translates to the “model graph” design paradigm that we discussed in an earlier post. Such an architecture allows us to make the best use of the available sparse supervision data, control hallucination, and make rapid improvements through reinforcement from experts, so that specific problem areas can be solved comprehensively.

For example, we can develop one agent that specializes in creating code sets by cross-walking many ontologies and looking for semantic synonymy. This agent can then be reused in any scenario that requires an accurate phenotype definition: in clinical research, to define populations for gathering real-world data; at the clinical point of care, to find “patients like this one” and choose appropriate clinical pathways; and in healthcare operations, to estimate the impact of operational decisions on the populations being served.

Plasticity: Even if each agent is autonomous and specialized, it should retain the ability to learn new tricks and abilities, and take on adjacent roles, especially when other parts of the network degrade. This transfer learning is important for resilience and adaptability. It is noteworthy that autonomy naturally enables plasticity because different agents can learn to do the same task without each other’s knowledge or assistance and then step in whenever required.

For example, when we already have an agent that is specialized in writing Python code after having learned from the code examples of millions of users, it can also easily write adequate code in other object-oriented programming (OOP) languages with sparse/few-shot learning, because it has understood the basic semantics of coding with a rich OOP language. This makes it relatively easy to support new scenarios that utilize new or proprietary languages, and encourages the development of such languages.
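To make the two principles concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not our production design: the `Agent` and `AgentNetwork` classes, the agent names, and the stubbed skills are all hypothetical. One code-set specialist serves three different scenarios (autonomy), and a second agent that has learned the same task steps in when the specialist is unavailable (plasticity).

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Agent:
    """A specialized agent: a name, the tasks it can perform, and a health flag."""
    name: str
    skills: Dict[str, Callable[[str], str]]
    healthy: bool = True


class AgentNetwork:
    """Hypothetical registry: routes a task to the first healthy agent that can do it."""

    def __init__(self) -> None:
        self._agents: List[Agent] = []

    def register(self, agent: Agent) -> None:
        self._agents.append(agent)  # registration order doubles as routing priority

    def run(self, task: str, payload: str) -> str:
        for agent in self._agents:
            if agent.healthy and task in agent.skills:
                return f"{agent.name}: {agent.skills[task](payload)}"
        raise LookupError(f"no healthy agent can perform task {task!r}")


# Autonomy: one code-set specialist (stubbed here) serves every phenotype request.
code_set_agent = Agent(
    "code_set_agent",
    {"define_phenotype": lambda condition: f"code set for {condition}"},
)
# Plasticity: a second agent has learned the same task and can step in.
backup_agent = Agent(
    "backup_agent",
    {"define_phenotype": lambda condition: f"approximate code set for {condition}"},
)

network = AgentNetwork()
network.register(code_set_agent)
network.register(backup_agent)


# Three scenarios, one shared capability (all names are illustrative).
def research_population(condition: str) -> str:
    return network.run("define_phenotype", condition)


def patients_like_this_one(condition: str) -> str:
    return network.run("define_phenotype", condition)


def operations_impact(condition: str) -> str:
    return network.run("define_phenotype", condition)
```

Setting `code_set_agent.healthy = False` causes the same three scenario functions to be served by `backup_agent` without any change to the calling code, which is the resilience property described above.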

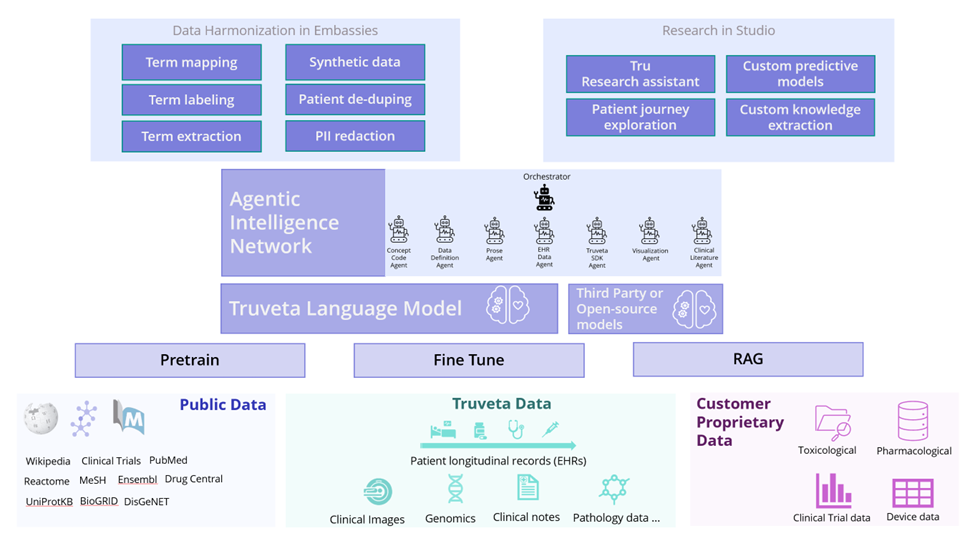

We have many degrees of freedom as we engineer networks that can achieve the above properties. We use off-the-shelf pre-trained models where possible, as well as pre-training our own models where needed. We then use techniques such as retrieval augmented generation (RAG) as well as fine-tuning for the agent-specific specialization. We apply techniques of model distillation to reduce cost and complexity.
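As a rough illustration of the RAG step, the sketch below retrieves the most relevant knowledge snippets for a question and assembles them into a prompt. It is a toy under stated assumptions: the snippets in `KNOWLEDGE` are invented placeholders, the bag-of-words cosine similarity stands in for a real embedding model, and no language model is actually called.

```python
import math
import re
from collections import Counter
from typing import List

# Placeholder knowledge base; a real agent would index curated domain content.
KNOWLEDGE = [
    "Hypothetical note: concept A in ontology X maps to concept B in ontology Y.",
    "Hypothetical note: lab units should be normalized before comparison.",
    "Hypothetical note: deidentification removes direct identifiers from notes.",
]


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words; a stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Assemble the retrieved context and the question into one prompt string."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


prompt = build_prompt("How are lab units normalized?", KNOWLEDGE, k=1)
print(prompt)
```

The prompt returned by `build_prompt` is what would be handed to the agent's underlying model. Specializing an agent then largely amounts to choosing which documents it may retrieve from.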

We provide an orchestration layer for the staging and usage of the various agents using open-source technologies such as LangGraph and AutoGen. By its very nature, the network supports a natural language interface for applications, which makes them less brittle and more accessible to non-technical users.
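The idea behind such an orchestration layer can be sketched in plain Python. The example below deliberately does not use the LangGraph or AutoGen APIs. The `Orchestrator` class, the node names, and the stubbed agents are all hypothetical, and the keyword check in `interpret` is a crude stand-in for a model that interprets a natural language request.

```python
from typing import Callable, Dict, Optional

State = Dict[str, object]
Node = Callable[[State], State]
Router = Callable[[State], Optional[str]]


class Orchestrator:
    """Hypothetical graph runner: nodes are agents, routers pick the next node."""

    def __init__(self) -> None:
        self._nodes: Dict[str, Node] = {}
        self._routers: Dict[str, Router] = {}

    def add_node(self, name: str, agent: Node, router: Router = lambda s: None) -> None:
        self._nodes[name] = agent
        self._routers[name] = router

    def run(self, entry: str, state: State, max_steps: int = 10) -> State:
        current: Optional[str] = entry
        steps = 0
        while current is not None:
            if steps >= max_steps:
                raise RuntimeError("step limit reached; possible routing cycle")
            state = self._nodes[current](dict(state))
            # Record which agents ran, in order, for later inspection.
            state["trace"] = [*state.get("trace", []), current]
            current = self._routers[current](state)
            steps += 1
        return state


def interpret(state: State) -> State:
    # Stand-in for a language model that classifies the request.
    text = str(state["request"]).lower()
    return {**state, "intent": "cohort" if "patients" in text else "unknown"}


def define_population(state: State) -> State:
    return {**state, "population": f"code set for: {state['request']}"}


def respond(state: State) -> State:
    answer = state.get("population", "Sorry, I cannot help with that yet.")
    return {**state, "answer": answer}


flow = Orchestrator()
flow.add_node(
    "interpret",
    interpret,
    lambda s: "define_population" if s["intent"] == "cohort" else "respond",
)
flow.add_node("define_population", define_population, lambda s: "respond")
flow.add_node("respond", respond)

result = flow.run("interpret", {"request": "Find patients with asthma"})
print(result["trace"], result["answer"])
```

Keeping the routing decisions in small functions separate from the agents means an agent can be swapped or upgraded without touching the graph that connects it to the others.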

Truveta’s AI stack

The AI stack that we have built at Truveta has the agentic intelligence network at its core. Feeding into that network are the various sources contributing to Truveta Data: EHR data from our member healthcare systems, social determinants of health (SDOH), claims, public and open-source data, and customer proprietary data. These data sources can be leveraged for pretraining models if desired, and are then primarily used for fine-tuning and RAG, resulting in many types of specialized AI agents that sit in the agentic network, including those using the Truveta Language Model. Agents are developed not only by Truveta ML engineers; we envisage that our customers and healthcare system (HCS) members will develop them too, and thus contribute specialized agents to the agentic network.

We sincerely believe that by building their own agents in the agentic intelligence network, various parties can contribute their expertise to the knowledge base of the community and enable rapid acceleration of AI-driven applications in healthcare.

While we facilitate the reuse and sharing of knowledge and expertise through the agentic intelligence network, we also care deeply about intellectual property and data rights. We provide strong controls over which data can be used for which agents, and which agents can be used in which applications, thus respecting data rights and protecting the business intelligence of contributors.
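These two levels of control can be pictured as a pair of allow-lists that are checked before any agent is invoked. The sketch below is only an assumption about how such a check could look: the `Policy` class and every dataset, agent, and application name in it are hypothetical, and anything not explicitly granted is denied.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class Policy:
    """Hypothetical deny-by-default policy with two allow-lists."""
    # dataset name -> agents permitted to use that dataset
    data_to_agents: Dict[str, Set[str]] = field(default_factory=dict)
    # agent name -> applications permitted to call that agent
    agent_to_apps: Dict[str, Set[str]] = field(default_factory=dict)

    def agent_may_use_data(self, agent: str, dataset: str) -> bool:
        return agent in self.data_to_agents.get(dataset, set())

    def app_may_use_agent(self, app: str, agent: str) -> bool:
        return app in self.agent_to_apps.get(agent, set())

    def authorize(self, app: str, agent: str, dataset: str) -> None:
        """Raise PermissionError unless both levels of control allow the call."""
        if not self.app_may_use_agent(app, agent):
            raise PermissionError(f"{app} may not use agent {agent}")
        if not self.agent_may_use_data(agent, dataset):
            raise PermissionError(f"{agent} may not use dataset {dataset}")


policy = Policy(
    data_to_agents={
        "ehr_member_data": {"code_set_agent"},
        "customer_proprietary": {"customer_agent"},
    },
    agent_to_apps={
        "code_set_agent": {"research_assistant", "customer_app"},
        "customer_agent": {"customer_app"},  # contributor keeps this agent private
    },
)

# Allowed: a shared agent on shared data (no exception is raised).
policy.authorize("research_assistant", "code_set_agent", "ehr_member_data")
```

In this toy setup, a contributor's proprietary data never reaches a shared agent, and a contributor's private agent is reachable only from the applications that the contributor has approved.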

Applications

Various kinds of applications can be built on top of the agentic intelligence network. We are already using it extensively for all the data harmonization applications that we host in the health care system embassies in our EHR data pipeline. We are also using the same network to power Tru, the research assistant in Truveta Studio.

We envisage that other products will be built in the future that leverage the network in novel ways, such as knowledge assistants for clinicians and patients at point of care, and assistants for healthcare operators. We hope our customers and partners will develop many custom applications using the agentic intelligence network.

While all such healthcare applications need to be deeply optimized for the specific type of persona involved in their scenarios, the underlying knowledge network need not, indeed should not, be reinvented. That ensures consistency and coherence of knowledge across all the applications. When knowledge and AI models are updated in the agentic intelligence network, those improvements can immediately flow to all applications, if so desired. At the same time, applications retain the ability to restrict and control the use of specific agents (and their specific versions), to assure auditability and stability of user experience as appropriate. We are already following this pattern in our data harmonization applications to enable regulatory-grade research.
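The trade-off between immediate improvement and stability can be sketched as version resolution against a shared registry. This is a simplified illustration, not our actual mechanism: `VersionedRegistry`, `Application`, the `normalizer` agent, and its stubbed versions are hypothetical names. An application that pins a version keeps that behavior until it chooses to upgrade, while an unpinned application always receives the latest published version.

```python
from typing import Callable, Dict, Optional, Tuple

AgentFn = Callable[[str], str]


class VersionedRegistry:
    """Hypothetical registry holding every published version of each agent."""

    def __init__(self) -> None:
        self._versions: Dict[str, Dict[int, AgentFn]] = {}

    def publish(self, name: str, version: int, fn: AgentFn) -> None:
        self._versions.setdefault(name, {})[version] = fn

    def resolve(self, name: str, pinned: Optional[int] = None) -> Tuple[int, AgentFn]:
        versions = self._versions[name]
        version = pinned if pinned is not None else max(versions)
        return version, versions[version]


class Application:
    """An application that may pin specific agent versions for auditability."""

    def __init__(self, registry: VersionedRegistry,
                 pins: Optional[Dict[str, int]] = None) -> None:
        self.registry = registry
        self.pins = pins or {}

    def call(self, agent: str, payload: str) -> str:
        version, fn = self.registry.resolve(agent, self.pins.get(agent))
        # Returning the version alongside the result keeps every output traceable.
        return f"{agent}@v{version}: {fn(payload)}"


registry = VersionedRegistry()
registry.publish("normalizer", 1, lambda s: s.upper())
registry.publish("normalizer", 2, lambda s: s.upper().replace(" ", "_"))

exploratory = Application(registry)                        # follows the latest
regulated = Application(registry, pins={"normalizer": 1})  # frozen for audits

print(exploratory.call("normalizer", "hemoglobin a1c"))
print(regulated.call("normalizer", "hemoglobin a1c"))
```

Publishing a later version changes what `exploratory` returns on its next call, while `regulated` keeps producing version 1 output until its pin is updated.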

Conclusion

In this series of articles, I articulated Truveta’s journey in building a state-of-the-art data and AI platform for healthcare research that assures quality, compliance, adaptability, and collaboration. The agentic intelligence network is the culmination of this journey. We believe it provides a framework that not only enables us to pursue our mission of Saving Lives with Data, but also empowers our members and customers in pursuing their missions and fosters collaboration across the healthcare community. That is the broader vision that I am truly excited about!

– Jay

Check out the earlier posts in this series:

Our journey of applying generative AI to advance healthcare

Delivering accuracy and explainability for AI

Ensuring privacy and compliance of AI

Healthcare AI: Earning trust and adoption by the research community