In this post, I would like to discuss how we addressed the following challenge: how to ensure that the AI technologies used in our platform have the requisite accuracy and explainability while also ensuring fairness and avoiding bias.

Healthcare and life science customers rely on our real-world data (RWD) platform for clinical research. We knew from the outset that ensuring accuracy and explainability would require investing in comprehensive model quality assessment, tracking performance over time, and maintaining a Quality Management System (QMS/ISO 9001) for both data processing and AI models. As we executed this plan, we also saw the need to provide evidence at several distinct levels: individual models, model compositions (the model graph or network level), and individual studies submitted to the FDA or other global regulatory authorities. We have developed a systematic way to support this through our infrastructure.

Philosophy of AI development with quality

In a previous blog post, we demonstrated how we extensively use AI technologies to transform semi-structured and unstructured data into structured knowledge that adheres to the Truveta Data Model (TDM) and is coded according to standard clinical ontologies.

Since it is not realistic to normalize our entire corpus of unstructured and semi-structured data for every imaginable clinical concept, we take a pragmatic approach: we develop and evolve our AI technologies to serve incoming customer scenarios with enough fidelity to meet their research goals.

We also need to ensure that these scenarios are additive: the knowledge added to TDM for a later scenario should expand and build on the knowledge derived in earlier scenarios.

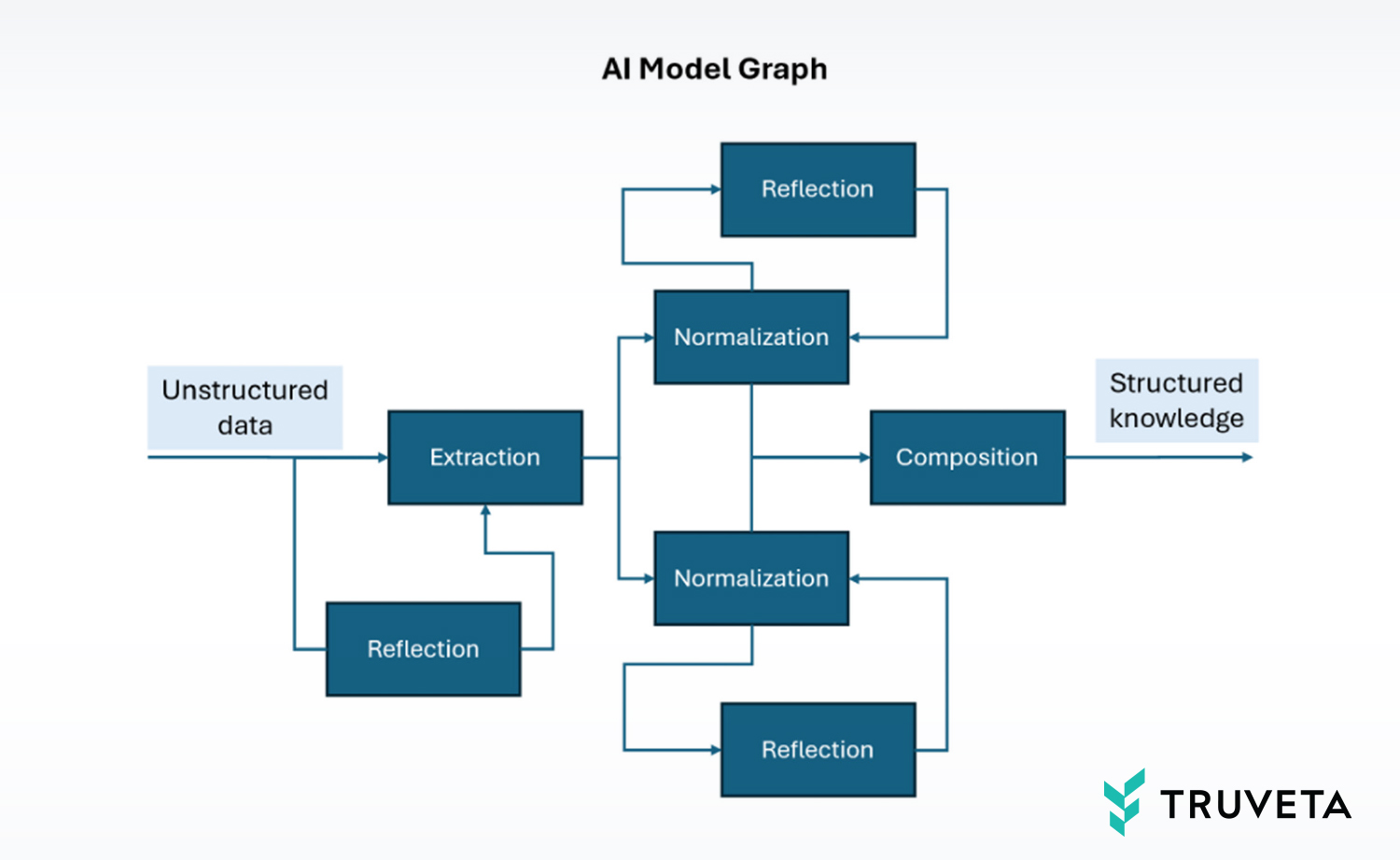

Generally speaking, customer scenarios are served by deploying one or more “AI Model Graphs,” such as the example shown above. Each graph is a network of models that have been jointly optimized to process specific aspects of unstructured data and together produce a final result. This “agentic framework” approach is now widely accepted as a pragmatic, high-ROI alternative to building a monolithic model. The architecture also makes new scenarios easier to build, because existing models in a graph can be reused while development focuses on the new specialized components.

Initially, to normalize semi-structured EHR concepts such as conditions, medications, lab values, and observations, we built base models that were simple, self-contained graphs. These were applied to the full Truveta patient population, seeding the TDM structured data with the core knowledge that is essential for every research study. Later, we also needed model graphs that apply only to a subset of patients, for example, extracting normalized cardiovascular concepts from the clinical notes of patients taking a specific medication.

Regardless of their scope, all the structured knowledge these graphs generate ultimately lands in the common pool of TDM data that all our customers query and analyze in notebooks. Because the solution for one scenario may inadvertently affect another, we need to carefully orchestrate model development and deployment so that new scenarios do not degrade previously enabled use cases and, where possible, improve them.

Let us see how that is achieved. Consider an example timeline in which four customer research scenarios onboard to our platform, in chronological order:

- Studying cardiovascular disease in the context of a particular implanted device

- Studying seizure frequency and its relationship with the use of a particular medication

- Studying symptoms and triggers for migraines

- Studying reasons for discontinuation of a particular migraine medication

In each case, an investigation by our clinical informaticists shows that the scenario would be served primarily by extracting concepts from the clinical notes of specific populations. The first three scenarios are different enough that the populations they affect are approximately disjoint, so they are served by distinct model graphs. The fourth scenario, however, overlaps with the third: it affects the data of a subset of patients with migraine. This creates an opportunity to reuse part or all of the model graph from scenario three and fine-tune it to serve scenario four. It also creates the risk of negatively affecting scenario three, and we need to ensure that no such regression happens. Our standard operating procedures (SOPs) for AI development cover this case, so the quality of AI models is governed by the same principles that apply to the deployment of code and services.

Quality gates for deployment

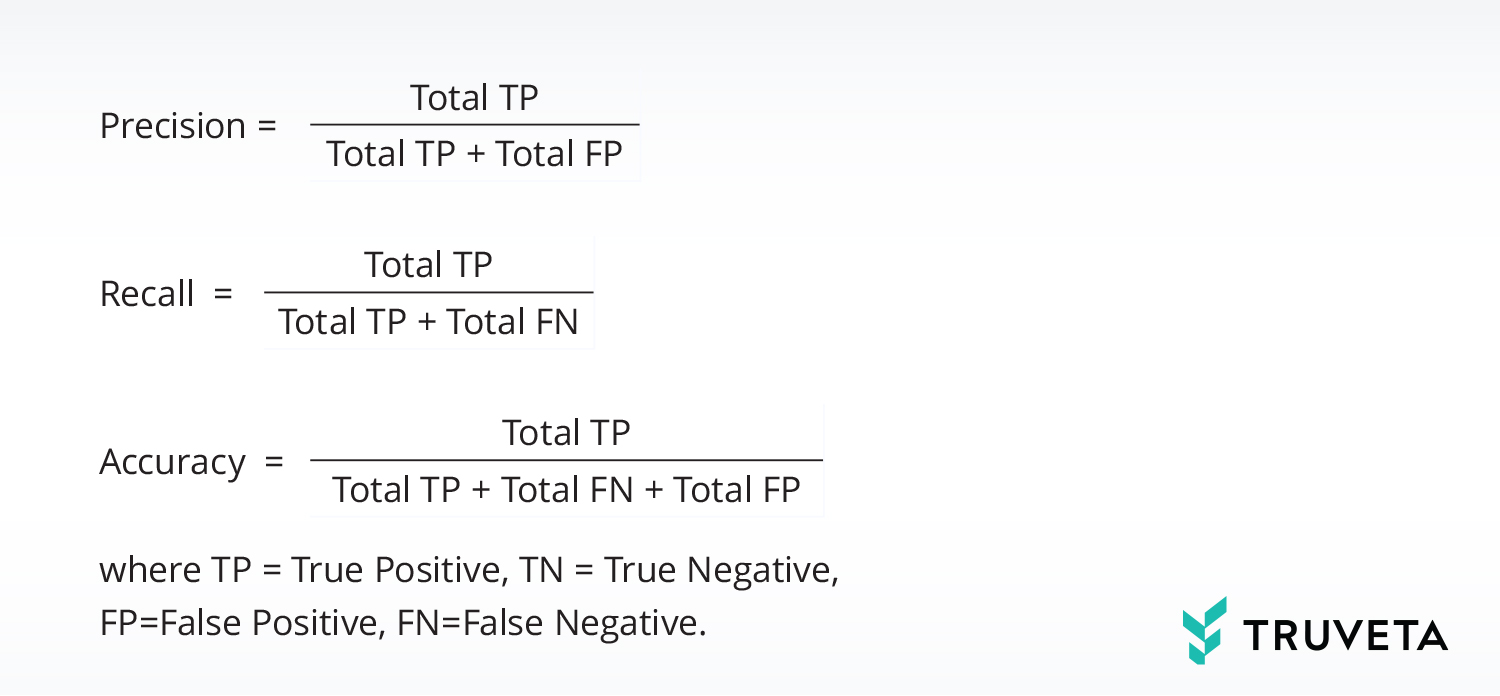

When we deliver the independent model graphs for the first three scenarios, we run a quality assurance process that measures the precision, recall, and accuracy of each component model in the graph, as well as of the overall graph:

Accuracy is generally defined as the fraction of all decisions that are correct, counting true positives (TPs), true negatives (TNs), false positives (FPs), and false negatives (FNs). In our definition, however, we exclude TNs to get a more sensitive measure. The reason is that the vast majority of concepts in the unstructured text of a clinical note are not clinically interesting. When the AI does not extract them, that is a TN: a correct decision, but not an interesting one. So, for the accuracy metric we remove TNs from both the numerator and the denominator, which keeps the metric sensitive to the comparatively small number of errors (FPs and FNs).
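In terms of confusion-matrix counts, the metrics can be written as below. The worked numbers are hypothetical and only illustrate why dropping TNs matters when TNs dominate the decisions.

$$
\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad
\mathrm{Recall} = \frac{TP}{TP + FN}
$$

$$
\mathrm{Accuracy}_{\text{standard}} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad
\mathrm{Accuracy}_{\text{TN-excluded}} = \frac{TP}{TP + FP + FN}
$$

With hypothetical counts $TP = 90$, $FP = 5$, $FN = 10$, $TN = 10{,}000$:

$$
\mathrm{Accuracy}_{\text{standard}} = \frac{10{,}090}{10{,}105} \approx 0.9985, \qquad
\mathrm{Accuracy}_{\text{TN-excluded}} = \frac{90}{105} \approx 0.857
$$

The standard form barely registers the 15 errors, while the TN-excluded form reflects them clearly.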

We verify that the quality metrics are acceptable against benchmarks we have devised from comparable results in the literature. Since these are independent graphs affecting disjoint populations, we know they will not cause regressions in each other. For the fourth scenario, we may choose to fine-tune some of the models from the third scenario's graph to leverage their pre-learned knowledge about extracting and normalizing migraine concepts. We test the updated model graph against the same precision, recall, and accuracy gates. If we also choose to use the newly tuned models in scenario three's graph going forward, we must separately re-run the quality assessment for scenario three. In short, we need to monitor for potential interference between scenarios.
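A minimal sketch of the deployment gate and the cross-scenario regression check follows. All names (`Metrics`, `passes_gate`, `release_check`), scenario labels, and benchmark numbers are hypothetical; the point is only that every scenario whose graph includes an updated model must be re-assessed and pass before the update ships.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metrics:
    precision: float
    recall: float
    accuracy: float  # TN-excluded accuracy: TP / (TP + FP + FN)


def passes_gate(measured: Metrics, benchmark: Metrics) -> bool:
    """A graph passes only if every metric meets or exceeds its benchmark."""
    return (
        measured.precision >= benchmark.precision
        and measured.recall >= benchmark.recall
        and measured.accuracy >= benchmark.accuracy
    )


def release_check(
    measured: dict[str, Metrics],
    benchmarks: dict[str, Metrics],
    affected: set[str],
) -> tuple[bool, list[str]]:
    """Gate a model update across every scenario whose graph uses an updated model.

    A scenario that shares an updated model but has not been re-assessed blocks
    the release; this is how interference between scenarios is caught.
    """
    failures = []
    for scenario in sorted(affected):
        if scenario not in measured:
            failures.append(f"{scenario}: not re-assessed")
        elif not passes_gate(measured[scenario], benchmarks[scenario]):
            failures.append(f"{scenario}: below benchmark")
    return (len(failures) == 0, failures)


# Hypothetical: scenario 4's tuned models will also be adopted by scenario 3.
benchmarks = {
    "migraine_symptoms": Metrics(0.90, 0.70, 0.65),
    "migraine_discontinuation": Metrics(0.90, 0.70, 0.65),
}
measured = {"migraine_discontinuation": Metrics(0.93, 0.78, 0.74)}
ok, why = release_check(measured, benchmarks, {"migraine_symptoms", "migraine_discontinuation"})
print(ok, why)
```

Here the release is blocked until scenario three has been re-assessed with the shared models.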

Continuous monitoring

Even after we deliver model graphs for scenarios, we continue to monitor them by periodically re-running the quality assurance process to check for degradation caused by drift in data distributions. Drift can happen for multiple reasons: organic changes in the real world, the onboarding of new health system Embassies to our platform (which adds new patient populations), changes in the TDM schema, and changes in the way health systems present their data to us. The Embassy-specific dependency is acute enough that it often warrants an Embassy-level quality assessment (in addition to an overall assessment) and, in some cases, fine-tuning of models for specific Embassies.
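The periodic re-check can be sketched as a comparison of current per-Embassy metrics against the values recorded at delivery. This is a hypothetical illustration: the function name, the Embassy labels, the choice of precision as the monitored metric, and the 0.02 tolerance are all assumptions.

```python
def check_embassies(
    baseline: dict[str, float],
    current: dict[str, float],
    tolerance: float = 0.02,
) -> tuple[dict[str, float], list[str]]:
    """Compare current precision per Embassy (plus "overall") with the baseline
    recorded at delivery.

    Returns (degraded, new_embassies): Embassies whose precision dropped by more
    than `tolerance`, and Embassies with no baseline yet, which need a first
    Embassy-level assessment.
    """
    degraded = {}
    for embassy, base_value in baseline.items():
        if embassy in current and base_value - current[embassy] > tolerance:
            degraded[embassy] = round(base_value - current[embassy], 4)
    new_embassies = sorted(set(current) - set(baseline))
    return degraded, new_embassies


# Hypothetical values from a periodic re-run of the QA process.
baseline = {"overall": 0.95, "embassy_a": 0.96, "embassy_b": 0.94}
current = {"overall": 0.94, "embassy_a": 0.96, "embassy_b": 0.90, "embassy_c": 0.88}
degraded, new_embassies = check_embassies(baseline, current)
print(degraded, new_embassies)
```

Note that the overall metric stays within tolerance here while one Embassy degrades, which is why an Embassy-level view is worth keeping alongside the overall one.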

A note on accuracy and explainability

Accuracy, as defined above, reflects the trade-off between precision and recall. There are several degrees of freedom when optimizing this trade-off: each component (node) of the model graph can be tuned in terms of its parameters and prompts, and hyperparameters such as the model confidence threshold can be tuned as well. However, we are not trying to optimize any one component model in isolation; rather, we want to optimize the entire model graph.

In general, our emphasis is on precision: we aim to maximize recall subject to a high bar for precision. Researchers treat the extracted and normalized concepts as factual knowledge, so a lack of precision can directly affect their conclusions. Low recall, in contrast, has a more indirect effect: it may reduce the statistical power of their analysis and, in some cases, induce bias by not fully capturing certain cohorts within the population. We therefore typically set the confidence threshold to achieve at least human-expert-level precision. Moderate- to low-confidence cases are sent to human experts for evaluation, and their annotations are used for active and reinforcement learning of the AI models.
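One way to pick a confidence threshold that meets a precision target while keeping as much recall as possible is to scan candidate thresholds on an expert-labeled validation set and keep the lowest one that still meets the target; outputs below it go to human review. This is a sketch under assumptions: `choose_threshold`, `route`, and the sample scores are hypothetical, and in practice the threshold would be tuned in the context of the whole graph rather than one model.

```python
from typing import Optional


def choose_threshold(
    scored: list[tuple[float, bool]], target_precision: float
) -> Optional[float]:
    """scored: (confidence, is_correct) pairs judged by experts on a validation set.

    Returns the lowest threshold whose accepted predictions still meet the
    precision target. A lower threshold accepts more predictions, so it keeps
    the most recall. Returns None if no threshold meets the target.
    """
    best = None
    for t in sorted({conf for conf, _ in scored}, reverse=True):
        accepted = [ok for conf, ok in scored if conf >= t]
        if sum(accepted) / len(accepted) >= target_precision:
            best = t  # precision need not be monotone, so keep scanning downward
    return best


def route(confidence: float, threshold: float) -> str:
    """Accept high-confidence outputs; send the rest to expert review."""
    return "accept" if confidence >= threshold else "human_review"


scored = [(0.95, True), (0.90, True), (0.85, True), (0.80, False), (0.70, True), (0.60, False)]
threshold = choose_threshold(scored, target_precision=0.78)
print(threshold, route(0.72, threshold), route(0.65, threshold))
```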

In classical machine learning models, such as classifiers working on well-defined features, techniques like SHapley Additive exPlanations (SHAP) can explain the contribution of specific features to a particular inference. However, these techniques do not transfer easily to LLMs and generative models that work on unstructured data. Ablation techniques, which identify the dominant features behind a decision by removing parts of the input and observing how the output changes, can be adapted to LLMs more easily. In a practical sense, explainability can also be addressed as interpretability: describing which data elements led to which parts of the AI output.
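A leave-one-out (occlusion) ablation over sentences illustrates the idea. `toy_migraine_score` is a hypothetical keyword scorer standing in for a real model; with an LLM, it would be replaced by the model's confidence for the extracted concept.

```python
from typing import Callable


def ablation_attribution(
    sentences: list[str], score: Callable[[str], float]
) -> list[tuple[str, float]]:
    """Leave-one-out ablation: a sentence's attribution is how much the model's
    score drops when that sentence is removed from the input."""
    full_score = score(" ".join(sentences))
    attributions = []
    for i, sentence in enumerate(sentences):
        ablated = " ".join(sentences[:i] + sentences[i + 1:])
        attributions.append((sentence, full_score - score(ablated)))
    return sorted(attributions, key=lambda pair: pair[1], reverse=True)


def toy_migraine_score(text: str) -> float:
    """Hypothetical stand-in for a model's confidence that a note documents migraine."""
    weights = {"migraine": 0.5, "aura": 0.3, "photophobia": 0.2}
    words = text.lower().replace(".", " ").split()
    return min(1.0, sum(w for term, w in weights.items() if term in words))


note = ["Patient reports migraine with aura.", "Denies chest pain.", "Notes photophobia during episodes."]
for sentence, contribution in ablation_attribution(note, toy_migraine_score):
    print(f"{contribution:+.2f}  {sentence}")
```

The sentence mentioning migraine with aura ranks first, and the irrelevant sentence contributes nothing. Each ablation costs one extra model call, which is the main price of this approach with large models.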

For example, in concept extraction with encoder-only LLMs, this can be achieved by tracing the text spans of the extracted concepts. In normalization models that use prompt engineering with retrieval-augmented generation (RAG), we can cite the specific ontology-guideline sources that were retrieved.
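Span tracing and RAG provenance can be carried as part of each output record. The sketch below is hypothetical: `ExplainedConcept`, `explain`, the placeholder code, and the guideline identifier are illustrative, and locating the span with `str.find` stands in for the token offsets an encoder model would emit directly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExplainedConcept:
    """An extracted concept together with the evidence that explains it."""
    concept: str                  # normalized concept name
    code: str                     # ontology code chosen by the normalization model
    start: int                    # character offset where the supporting span starts
    end: int                      # exclusive end offset of the supporting span
    rag_sources: tuple[str, ...]  # guideline passages retrieved for normalization

    def evidence(self, note: str) -> str:
        """Return the exact note text that supports this concept."""
        return note[self.start:self.end]


def explain(note: str, surface: str, concept: str, code: str, sources: list[str]) -> ExplainedConcept:
    # Hypothetical: a real encoder model emits token offsets; str.find stands in here.
    start = note.find(surface)
    if start < 0:
        raise ValueError(f"{surface!r} not found in note")
    return ExplainedConcept(concept, code, start, start + len(surface), tuple(sources))


note = "Pt has hx of migraine w/ aura, worse with light."
result = explain(note, "migraine w/ aura", "Migraine with aura", "HYPOTHETICAL-CODE-001",
                 ["guideline: hypothetical-migraine-section"])
print(result.start, result.end, repr(result.evidence(note)))
```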

Explainability also plays an important role in other areas, such as patient record matching. There, we define a similarity measure between patients based on a vector representation of each patient derived from irreversible functions of their identifiable data. This ad hoc similarity measure can then be further tuned by an AI model through supervised training. The supervision comes from known ground truth: patient records that belong to the same person but have distinct representations in EHR systems. When the model declares a set of records to be duplicate representations of the same person, the decision can be explained in terms of the separation of those records in the representation space.
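The post does not specify the irreversible encoding, so the sketch below swaps in a well-known technique from privacy-preserving record linkage: character bigrams of normalized identifiers are hashed with a keyed HMAC-SHA-256 into a Bloom-filter-style set of bit positions, and records are compared with the Dice coefficient. The key, field choice, and sizes are assumptions, and the supervised tuning step is not shown. The distance `1 - dice(...)` is the kind of separation that can explain a match decision.

```python
import hashlib
import hmac


def encode(fields: list[str], key: bytes, num_bits: int = 1024, num_hashes: int = 4) -> frozenset[int]:
    """Irreversibly encode identifying fields as a set of bit positions.

    Each padded character bigram of each normalized field is hashed with a
    keyed HMAC-SHA-256, once per hash index, and mapped to a bit position.
    """
    bits = set()
    for value in fields:
        text = f"_{value.strip().lower()}_"
        for i in range(len(text) - 1):
            bigram = text[i:i + 2]
            for k in range(num_hashes):
                digest = hmac.new(key, f"{k}|{bigram}".encode("utf-8"), hashlib.sha256).digest()
                bits.add(int.from_bytes(digest[:4], "big") % num_bits)
    return frozenset(bits)


def dice(a: frozenset[int], b: frozenset[int]) -> float:
    """Dice similarity of two encodings; 1 - dice is the separation between records."""
    if not a and not b:
        return 1.0
    return 2 * len(a & b) / (len(a) + len(b))


key = b"hypothetical-shared-secret"
r1 = encode(["Jonathan", "Smith", "1980-04-02"], key)
r2 = encode(["Jonathon", "Smith", "1980-04-02"], key)  # same person, typo in first name
r3 = encode(["Maria", "Lopez", "1975-11-30"], key)
print(round(dice(r1, r2), 3), round(dice(r1, r3), 3))
```

Because only bigrams are compared, a one-letter typo leaves the two records close together, while unrelated people stay far apart.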

Regulatory-grade model lifecycle

Truveta recently announced regulatory and audit capabilities to support our customers in real-world evidence submissions to the FDA and other regulatory authorities. To enable this capability, we generate evidence from controls that satisfy the SOPs described earlier. For example, model updates are versioned, and model deployments are gated on a model quality assessment that passes pre-defined benchmarks. As with other software engineering changes, model updates are rolled out in rings with continuous monitoring.
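Gated, ring-based rollout can be sketched as follows. The ring names, the version strings, and the `healthy` monitoring callback are hypothetical; the sketch only shows that a version which fails the quality gate never deploys, and that an unhealthy ring stops the rollout.

```python
from typing import Callable

RINGS = ["internal", "ring_1", "ring_2", "all_customers"]  # hypothetical ring names


def roll_out(
    version: str, gate_passed: bool, healthy: Callable[[str, str], bool]
) -> tuple[list[str], str]:
    """Deploy a versioned model graph ring by ring.

    gate_passed: outcome of the pre-deployment quality assessment.
    healthy: monitoring verdict for (version, ring) after a bake period.
    Returns the rings the version reached and a status message. A real system
    would also restore the previous version in the reached rings on rollback.
    """
    if not gate_passed:
        return [], f"{version}: blocked by quality gate"
    reached = []
    for ring in RINGS:
        reached.append(ring)
        if not healthy(version, ring):
            return reached, f"{version}: rolled back at {ring}"
    return reached, f"{version}: fully deployed"


print(roll_out("graph-v4", True, lambda version, ring: ring != "ring_2"))
```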

Engineering metrics

The quality of the AI model graphs is measured by a large array of engineering metrics. These are detailed and technical metrics that allow our AI team to closely monitor the health and quality of the pipeline. These metrics are retained as evidence for regulatory submissions. We have internal benchmarks for these engineering quality metrics based on industry literature, and they evolve as the technology improves. We publish our metrics from time to time in research publications to demonstrate how we are advancing innovation.

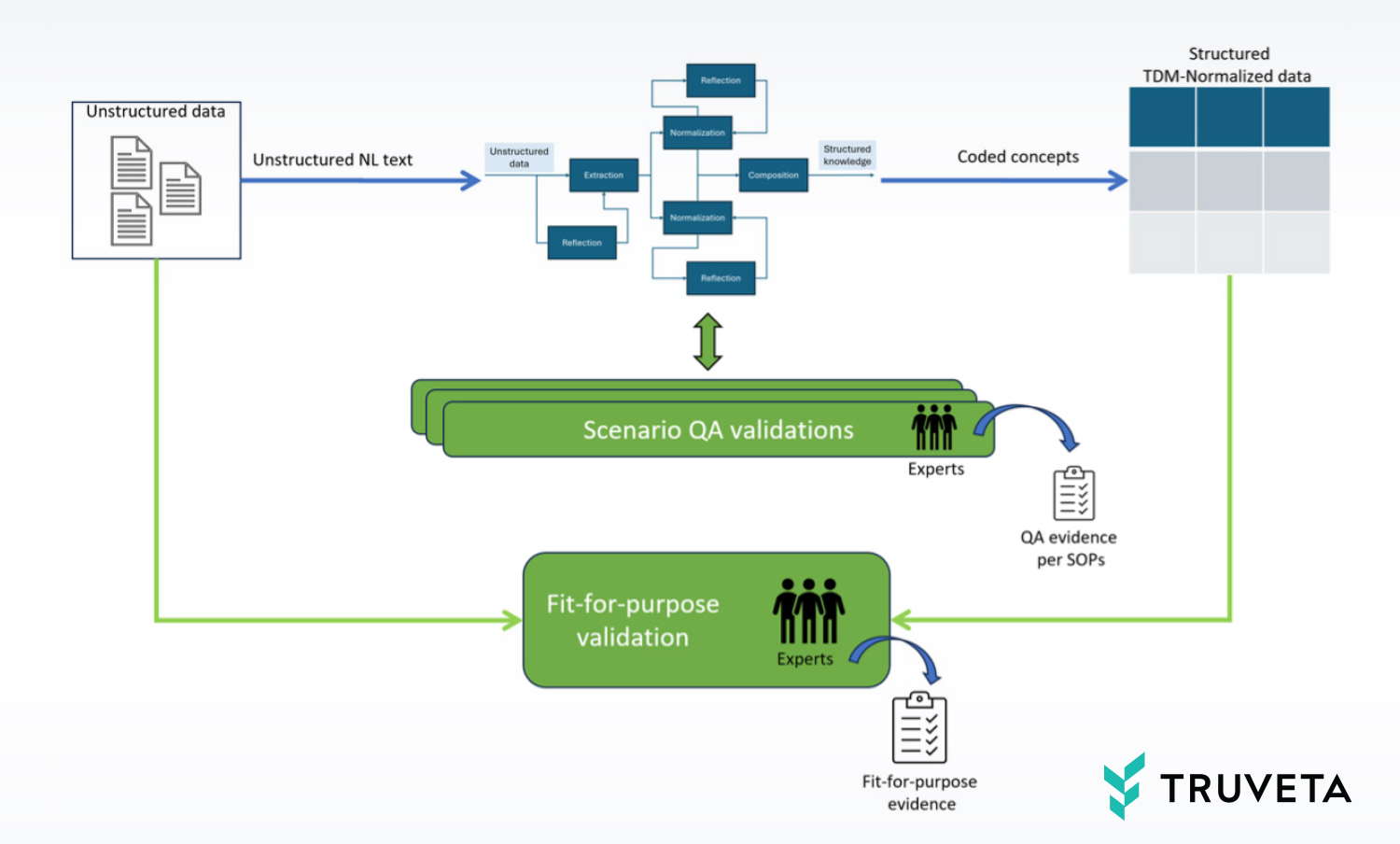

Quality assurance (QA) report

A second type of quality report is the quality assurance (QA) report. This is calculated on a “blind set” of unstructured data that is never seen by our AI developers. (In fact, this report is generated by individuals outside the AI team.) The blind set is separately annotated and adjudicated by experts to produce ground truth. When an AI model graph is ready for deployment to customers, it is applied to this blind set, and the results are compared against the expert ground truth to calculate the rates of false positives and false negatives. The aggregated metrics, in terms of precision, recall, and accuracy, are compared with pre-determined benchmarks. If the AI model graph meets the high standards of the QA expert evaluation, it is deployed for the customer scenario. The QA report is recorded as evidence for that deployment and is made available to customers and auditors on request.
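Comparing blind-set predictions with expert ground truth reduces to set arithmetic if each finding is represented as a (note ID, concept) pair. This is an illustrative sketch: the pair representation, the `qa_report` and `meets_benchmarks` names, and the sample data are assumptions.

```python
def _ratio(numerator: int, denominator: int) -> float:
    # An empty denominator is reported as 0.0 so that it can never pass a gate.
    return numerator / denominator if denominator else 0.0


def qa_report(predicted: set[tuple[str, str]], ground_truth: set[tuple[str, str]]) -> dict[str, float]:
    """Score blind-set predictions against expert annotations.

    Each element is a (note_id, concept) pair.
    """
    tp = len(predicted & ground_truth)
    fp = len(predicted - ground_truth)  # extracted but not in the expert annotation
    fn = len(ground_truth - predicted)  # annotated by experts but missed
    return {
        "tp": tp, "fp": fp, "fn": fn,
        "precision": _ratio(tp, tp + fp),
        "recall": _ratio(tp, tp + fn),
        "accuracy": _ratio(tp, tp + fp + fn),  # TN-excluded accuracy
    }


def meets_benchmarks(report: dict[str, float], benchmarks: dict[str, float]) -> bool:
    """True if every benchmarked metric reaches its minimum."""
    return all(report[metric] >= minimum for metric, minimum in benchmarks.items())


truth = {("n1", "migraine"), ("n1", "aura"), ("n2", "photophobia"), ("n3", "nausea")}
pred = {("n1", "migraine"), ("n1", "aura"), ("n2", "photophobia"), ("n2", "vertigo")}
report = qa_report(pred, truth)
print(report, meets_benchmarks(report, {"precision": 0.9, "recall": 0.7}))
```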

Logging the evidence

The engineering metrics and QA reports are the evidence for regulatory-grade design and deployment of AI models. The evidence is logged into a quality management system (QMS) along with evidence from multiple other engineering components and services. Each service produces evidence from controls to show that it is working according to its pre-defined benchmarks. Regulatory-grade clinical research done on a data snapshot extracted from Truveta Data is accompanied by evidence sets regarding the correct operation of all the systems at the time the snapshot was generated, including all the AI systems and models.
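A simplified sketch of evidence records, and of assembling the evidence set that applies to a snapshot, follows. The record fields, service and control names, and dates are hypothetical; hashing the serialized report is one way to pin exactly which artifact a record refers to.

```python
import hashlib
import json
from datetime import datetime


def make_record(service: str, control: str, artifact: dict, passed: bool, recorded_at: datetime) -> dict:
    """One evidence entry; the SHA-256 digest pins the exact artifact content."""
    digest = hashlib.sha256(json.dumps(artifact, sort_keys=True).encode("utf-8")).hexdigest()
    return {"service": service, "control": control, "passed": passed,
            "artifact_sha256": digest, "recorded_at": recorded_at}


def evidence_for_snapshot(records: list[dict], snapshot_time: datetime) -> dict:
    """Latest evidence per (service, control) recorded at or before the snapshot."""
    latest: dict = {}
    for record in records:
        if record["recorded_at"] > snapshot_time:
            continue  # produced after the snapshot, so it cannot vouch for it
        key = (record["service"], record["control"])
        if key not in latest or record["recorded_at"] > latest[key]["recorded_at"]:
            latest[key] = record
    return latest


records = [
    make_record("migraine_graph", "qa_report", {"version": "v3", "precision": 0.94}, True, datetime(2024, 1, 10)),
    make_record("migraine_graph", "qa_report", {"version": "v4", "precision": 0.95}, True, datetime(2024, 2, 10)),
    make_record("search", "regression_suite", {"build": "hypothetical-123"}, True, datetime(2024, 1, 5)),
]
evidence = evidence_for_snapshot(records, snapshot_time=datetime(2024, 2, 1))
print(sorted(evidence), all(r["passed"] for r in evidence.values()))
```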

Fit-for-purpose assessment

An additional validation is performed at customer request to assess whether the overall mechanism for selecting patient populations for a study works correctly according to their expectations. This evaluation is inspired by ISO 13485, “Medical devices — Quality management systems — Requirements for regulatory purposes” (Sections 7.3.2, 7.3.6, and 7.3.7). It examines how EHR data and structured elements extracted from notes together satisfy the inclusion and exclusion criteria specified by the customer. These criteria can rely on multiple custom concepts (i.e., multiple AI model graphs) as well as on the correct operation of engineering systems such as search, the data snapshot generation process, and data quality management. This evaluation therefore assesses whether the ensemble of AI model graphs and the surrounding engineering systems together enable the creation of a population that is fit for a particular research project; hence the name “fit-for-purpose” assessment.

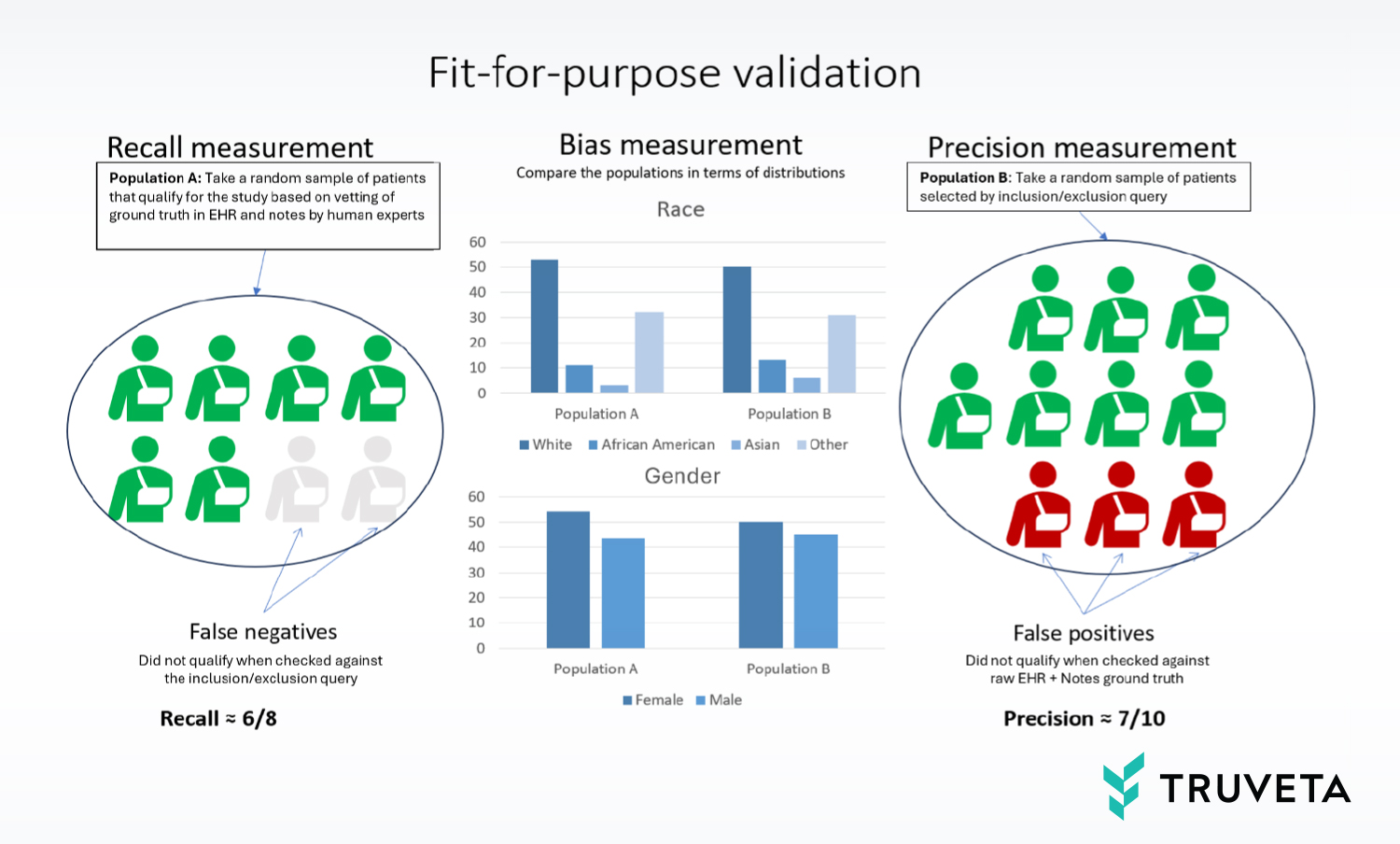

To achieve this, we use human experts to measure the recall, precision, and bias as follows:

- Recall: Sample a set of patients (a) who, based on expert assessment, are known to have the required concepts in their raw (unstructured, un-normalized) EHR data and clinical notes. Measure what fraction of them are captured by the inclusion/exclusion query on structured data.

- Precision: Sample a set of patients (b) from the population selected by the inclusion/exclusion query on structured data, and back-check what fraction of them have raw notes and/or EHR data that confirm or support their qualification.

- Bias: Compare the above two populations (a) and (b) and see if they are sufficiently matched/balanced with respect to variables that need to be controlled in the study such as race and sex.
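The three measurements can be sketched as below. Everything here is hypothetical: the function names, the patient IDs, and the use of the largest difference in category proportions as the balance measure (standardized mean differences are a common alternative).

```python
from collections import Counter


def category_shares(ids: list[str], attribute: dict[str, str]) -> dict[str, float]:
    """Proportion of patients in each category of a controlled variable."""
    counts = Counter(attribute[p] for p in ids)
    return {category: n / len(ids) for category, n in counts.items()}


def fit_for_purpose(
    sample_a: set[str],
    backcheck_b: dict[str, bool],
    query_result: set[str],
    attribute: dict[str, str],
) -> tuple[float, float, float]:
    """sample_a: patients experts confirmed to qualify from raw notes and EHR data.
    backcheck_b: sample drawn from query_result -> whether experts confirmed it.
    query_result: patients selected by the structured inclusion/exclusion query.
    attribute: patient -> a controlled variable such as sex.
    """
    recall = len(sample_a & query_result) / len(sample_a)
    precision = sum(backcheck_b.values()) / len(backcheck_b)
    shares_a = category_shares(list(sample_a), attribute)
    shares_b = category_shares(list(backcheck_b), attribute)
    # Balance check: largest gap in category proportions between (a) and (b).
    max_gap = max(abs(shares_a.get(c, 0.0) - shares_b.get(c, 0.0)) for c in set(shares_a) | set(shares_b))
    return recall, precision, max_gap


sample_a = {"p1", "p2", "p3", "p4"}
query_result = {"p1", "p2", "p3", "p5", "p6", "p7"}
backcheck_b = {"p1": True, "p5": True, "p6": True, "p7": False}
attribute = {"p1": "F", "p2": "F", "p3": "M", "p4": "M", "p5": "F", "p6": "M", "p7": "F"}
print(fit_for_purpose(sample_a, backcheck_b, query_result, attribute))
```

Whether a 25-point gap in sex balance is acceptable is exactly the study-specific judgment left to the human experts.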

The human experts can determine whether those measurements are within an acceptable range for the research study being envisioned, which is highly dependent on the specific nature of the research question.

Conclusion

Developing and deploying AI models consistently is a challenge that all AI providers face. When customers fine-tune a newly released version of an off-the-shelf model, they must check backward for quality against previous uses. In Truveta's case, where AI models extract and normalize unstructured data, the challenge takes a new form: interference between scenarios. We have described how we address this challenge pragmatically through continuous quality assessments and fit-for-purpose analyses.