Continuing with my series on AI, today I want to discuss the following question: how can healthcare data be made available for clinical and life science research while leveraging AI in a safe and compliant manner?

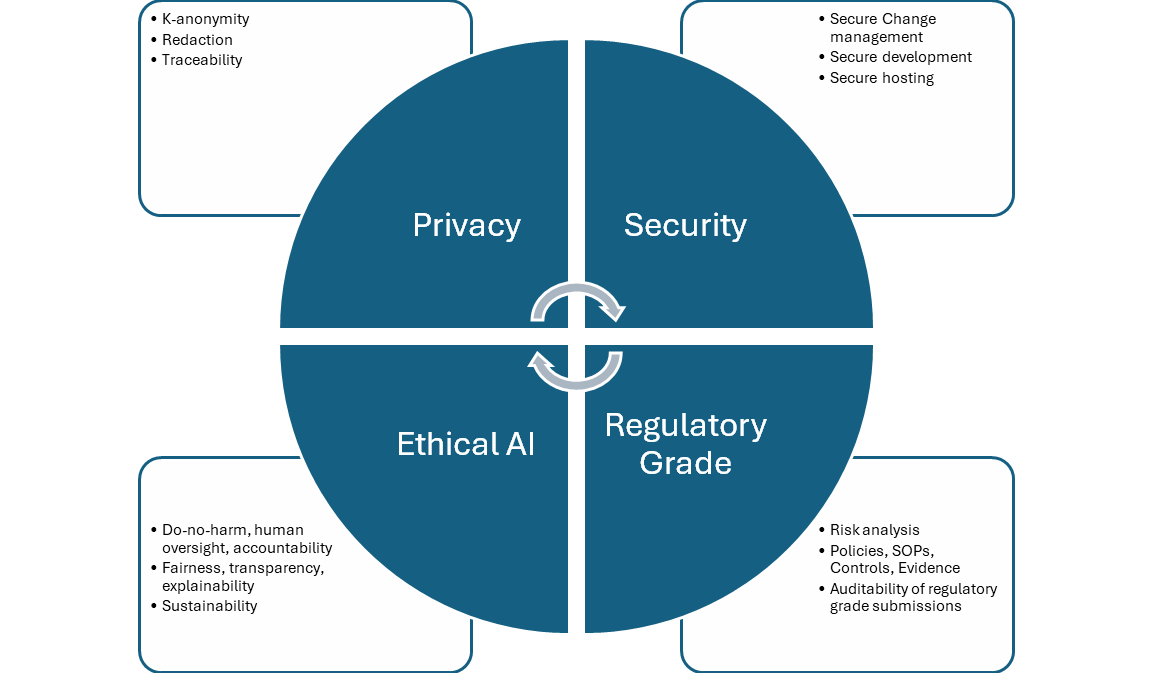

There are four aspects to compliance in healthcare analytics: privacy, security, regulatory-grade quality, and ethical AI. Each is governed by distinct but interrelated regulatory standards and recommendations. They apply broadly to all engineering systems, and in particular to AI, which is our focus here.

Privacy

Any health record containing information that identifies a patient is considered Protected Health Information (PHI) under the Health Insurance Portability and Accountability Act (HIPAA). To protect patient privacy, HIPAA places strict controls on how PHI can be stored, managed, and shared.

At the same time, HIPAA recognizes the value of using de-identified health data for research. When PHI is de-identified in accordance with HIPAA, the risk that any patient could be identified is very small.

The HIPAA Privacy Rule sets the standard for de-identification. Once the standard is satisfied, the resulting data is no longer considered PHI and can be disclosed outside a health system’s network and used for research. The Privacy Rule provides two methods of de-identification: Safe Harbor and Expert Determination. Truveta uses the Expert Determination method, working with experts who are experienced in making Expert Determinations in accordance with guidance from the Office for Civil Rights (OCR), the division of the US Department of Health and Human Services (HHS) responsible for HIPAA enforcement.

Redaction

The structured and unstructured data sent by Truveta’s health system members can contain identifiers, such as name, address, and date of birth, intermixed with health data. We use AI models trained to detect identifiers in structured data, clinical notes, and images (both pixels and metadata). Because these models must be trained on PHI, training happens in a tightly controlled PHI redaction zone. The trained redaction models are then deployed, and their redacted output is consumed by subsequent AI model training and scoring in our data pipelines.
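To illustrate the shape of the redaction step, here is a minimal sketch: a detector returns labeled character spans, and a redactor replaces each span with a placeholder. The post describes trained AI models as the detector; the regular expressions in `detect_identifiers` are only a hypothetical stand-in so the sketch runs, and they would miss names, addresses, and much else.

```python
import re
from dataclasses import dataclass
from typing import List

@dataclass
class Span:
    start: int
    end: int
    label: str

# Hypothetical stand-in for a trained identifier-detection model:
# same interface (text in, labeled spans out), far weaker detection.
_PATTERNS = {
    "DATE": re.compile(r"\b\d{1,2}/\d{1,2}/\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}-\d{3}-\d{4}\b"),
    "MRN": re.compile(r"\bMRN:?\s*\d+\b"),
}

def detect_identifiers(text: str) -> List[Span]:
    return [Span(m.start(), m.end(), label)
            for label, pattern in _PATTERNS.items()
            for m in pattern.finditer(text)]

def redact(text: str, spans: List[Span]) -> str:
    """Replace each detected span with a [LABEL] placeholder."""
    out, cursor = [], 0
    for span in sorted(spans, key=lambda s: s.start):
        if span.start < cursor:  # skip spans overlapping one already redacted
            continue
        out.append(text[cursor:span.start])
        out.append(f"[{span.label}]")
        cursor = span.end
    out.append(text[cursor:])
    return "".join(out)

note = "Pt seen 03/14/2023, MRN 448812, callback 555-201-3344. Reports chest pain."
print(redact(note, detect_identifiers(note)))
# Pt seen [DATE], [MRN], callback [PHONE]. Reports chest pain.
```

Separating detection from redaction mirrors the pipeline described above: the detector is the part trained inside the PHI zone, while the redacted text is what downstream models consume.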

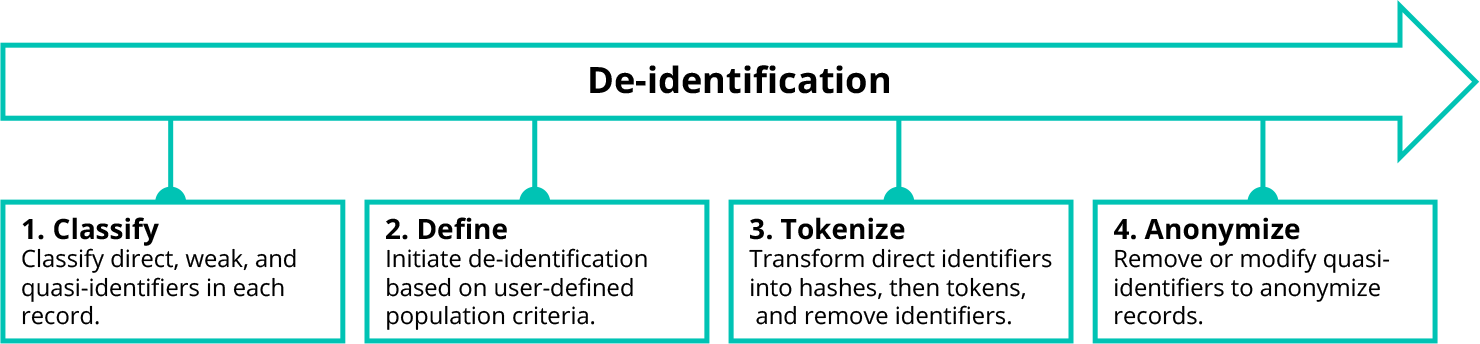

De-identification

Truveta’s de-identification system is a multi-stage process designed to effectively de-identify millions of medical records from health system members while also safely merging related data from data partners. In the case of structured data, there are four major steps in this process, which leverage AI:

When a researcher performs a study in Truveta Studio, they start by defining the population of patients to be studied. This patient population definition is sent directly to each health system’s embassy (a term we explained in the earlier blog post “Generating Data Gravity”), which identifies matching patients using the search index. The matching records are passed through Truveta’s de-identification process before any data can be accessed via Truveta Studio for use in research.
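A rough sketch of that flow, assuming a population definition can be expressed as a predicate over a patient record: each embassy (modeled by a hypothetical `Embassy` class standing in for its search index) filters its own records, and only the pooled matches are passed to a de-identification step. The `deidentify` argument here is a placeholder, not Truveta's process.

```python
from dataclasses import dataclass
from typing import Callable, List

Record = dict  # hypothetical shape: one patient's structured data

@dataclass
class Embassy:
    """Hypothetical stand-in for a health system's embassy and its search index."""
    name: str
    records: List[Record]

    def search(self, population: Callable[[Record], bool]) -> List[Record]:
        # Matching runs inside the embassy; non-matching records never leave it.
        return [r for r in self.records if population(r)]

def run_study(population, embassies, deidentify):
    """Send the population definition to every embassy, then de-identify the pooled matches."""
    matches = [r for embassy in embassies for r in embassy.search(population)]
    return deidentify(matches)

def drop_names(rows: List[Record]) -> List[Record]:
    # Placeholder only; real de-identification is far more involved.
    return [{k: v for k, v in r.items() if k != "name"} for r in rows]

embassies = [
    Embassy("A", [{"name": "x", "dx": "T2D", "age": 54}, {"name": "y", "dx": "HTN", "age": 61}]),
    Embassy("B", [{"name": "z", "dx": "T2D", "age": 47}]),
]
print(run_study(lambda r: r["dx"] == "T2D", embassies, drop_names))
# [{'dx': 'T2D', 'age': 54}, {'dx': 'T2D', 'age': 47}]
```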

Unlike direct identifiers, weak identifiers and quasi-identifiers do not always need to be removed to preserve patient privacy. In many cases, weak identifiers and quasi-identifiers only need to be modified, replacing specific data points with less precise values.
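To make "less precise values" concrete, here is a minimal sketch of generalizing three common quasi-identifiers. The specific rules (birth year only, three-digit ZIP prefix, ten-year age bands) are illustrative assumptions, not Truveta's actual configuration.

```python
from datetime import date

def generalize_birth_date(d: date) -> str:
    return str(d.year)  # keep the year, drop month and day

def generalize_zip(zip_code: str) -> str:
    return zip_code[:3] + "**"  # keep only the three-digit prefix

def generalize_age(age: int, width: int = 10) -> str:
    low = (age // width) * width
    return f"{low}-{low + width - 1}"  # e.g. 53 -> "50-59"

record = {"birth_date": date(1971, 6, 2), "zip": "98052", "age": 53}
generalized = {
    "birth_date": generalize_birth_date(record["birth_date"]),
    "zip": generalize_zip(record["zip"]),
    "age": generalize_age(record["age"]),
}
print(generalized)  # {'birth_date': '1971', 'zip': '980**', 'age': '50-59'}
```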

To drive this process, Truveta uses a well-known de-identification technique called k-anonymity. We modify or remove weak identifiers and quasi-identifiers in a data set to create groups (called equivalence classes) in which at least k records share the same identifier values. The higher the value of k, the lower the risk of matching a patient to a record. Because a larger pool of records makes it easier to form equivalence classes of size k without suppressing data, we look across all health systems when building equivalence classes, which maximizes the privacy benefit and minimizes suppression effects on research.
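A minimal sketch of the k-anonymity check itself, assuming the quasi-identifiers have already been generalized and that any record in an equivalence class smaller than k is simply suppressed (real pipelines usually generalize further before suppressing). The toy data also shows why pooling across health systems helps: two systems that each fail k = 3 on their own satisfy it together.

```python
from collections import Counter

def enforce_k_anonymity(records, quasi_identifiers, k):
    """Keep only records whose equivalence class has at least k members.

    Returns (kept_records, suppressed_count).
    """
    def class_key(r):
        return tuple(r[q] for q in quasi_identifiers)

    class_sizes = Counter(class_key(r) for r in records)
    kept = [r for r in records if class_sizes[class_key(r)] >= k]
    return kept, len(records) - len(kept)

QI = ("age", "zip")
system_a = [{"age": "50-59", "zip": "980**"}, {"age": "50-59", "zip": "980**"}, {"age": "60-69", "zip": "980**"}]
system_b = [{"age": "50-59", "zip": "980**"}, {"age": "60-69", "zip": "980**"}, {"age": "60-69", "zip": "980**"}]

print(enforce_k_anonymity(system_a, QI, k=3)[1])             # 3 records suppressed
print(enforce_k_anonymity(system_b, QI, k=3)[1])             # 3 records suppressed
print(enforce_k_anonymity(system_a + system_b, QI, k=3)[1])  # 0 records suppressed
```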

De-identification may still suppress entire patient records, or specific fields that are of interest to a researcher. To minimize these effects, researchers can configure the de-identification process for their study, setting the tradeoffs in fidelity and the priority of specific weak or quasi-identifiers so that the de-identified data still meets their study goals.
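One way such a study-level configuration could work, sketched under assumptions: each quasi-identifier has a hypothetical generalization hierarchy (`HIERARCHIES`), the researcher ranks fields by importance, and a greedy loop generalizes the least important field first until the number of suppressed records fits within an allowed budget. This is a simple illustrative heuristic, not Truveta's algorithm.

```python
from collections import Counter

# Hypothetical hierarchies: index 0 keeps the original value; later levels are coarser.
HIERARCHIES = {
    "age": [lambda a: str(a), lambda a: f"{a // 10 * 10}s", lambda a: "*"],
    "zip": [lambda z: z, lambda z: z[:3] + "**", lambda z: "*"],
}

def apply_levels(records, levels):
    return [{f: HIERARCHIES[f][lvl](r[f]) for f, lvl in levels.items()} for r in records]

def suppressed(rows, k):
    """Number of rows in equivalence classes smaller than k."""
    counts = Counter(tuple(sorted(r.items())) for r in rows)
    return sum(c for c in counts.values() if c < k)

def configure(records, k, priority, max_suppressed):
    """priority lists fields from most to least important to the study."""
    levels = {f: 0 for f in priority}
    for field in reversed(priority):  # generalize the least important field first
        while suppressed(apply_levels(records, levels), k) > max_suppressed:
            if levels[field] == len(HIERARCHIES[field]) - 1:
                break  # this field is fully generalized; move to the next one
            levels[field] += 1
    return levels, suppressed(apply_levels(records, levels), k)

records = [{"age": 52, "zip": "98052"}, {"age": 57, "zip": "98033"},
           {"age": 55, "zip": "98004"}, {"age": 61, "zip": "98052"}]
print(configure(records, k=2, priority=["age", "zip"], max_suppressed=1))
print(configure(records, k=2, priority=["zip", "age"], max_suppressed=1))
```

With these toy records, ranking age first keeps decade-level age at the cost of fully generalizing ZIP (one record suppressed), while ranking ZIP first keeps the three-digit prefix and gives up age entirely (nothing suppressed).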

Watermarking and fingerprinting

A third mechanism that supports privacy is the use of fingerprinting and watermarking algorithms that make our data traceable. When a de-identified data snapshot is produced and exported from our systems by any customer, we can unambiguously identify that the data originated from Truveta, along with its provenance: who created the snapshot and when. These algorithms do not reduce the utility of the data from a clinical research perspective. This mechanism allows us to enforce compliant behavior by platform users, who must take proper precautions in using and sharing the data as required by their contractual agreements with Truveta, further safeguarding patient privacy.
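The post does not describe Truveta's fingerprinting or watermarking algorithms. As a much simpler stand-in, the sketch below shows only the traceability idea: a keyed fingerprint (HMAC-SHA256 over a canonical JSON serialization) is registered together with who exported the snapshot and when, and a recovered copy can later be looked up. Unlike real watermarking, it leaves the data untouched but only matches exact copies; `SECRET_KEY` and `REGISTRY` are hypothetical.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone
from typing import Dict, List, Optional

SECRET_KEY = b"hypothetical-platform-key"  # assumption: never leaves the platform
REGISTRY: Dict[str, dict] = {}              # fingerprint -> provenance record

def fingerprint(rows: List[dict]) -> str:
    """Keyed HMAC-SHA256 over a canonical JSON form; the rows themselves are not modified."""
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(SECRET_KEY, canonical, hashlib.sha256).hexdigest()

def register_export(rows: List[dict], user_id: str) -> str:
    fp = fingerprint(rows)
    REGISTRY[fp] = {"user": user_id, "created_at": datetime.now(timezone.utc).isoformat()}
    return fp

def trace(rows: List[dict]) -> Optional[dict]:
    """Provenance of a recovered snapshot, or None if it was never registered."""
    return REGISTRY.get(fingerprint(rows))

snapshot = [{"age": "50-59", "dx": "T2D"}, {"age": "60-69", "dx": "HTN"}]
register_export(snapshot, "researcher-42")
print(trace(snapshot)["user"])  # researcher-42
```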

To learn more about Truveta’s commitment to privacy, you can read our whitepaper on our approach to protecting patient privacy.

Security

Development & operations (DevOps) – general security principles

Ensuring the security of the systems that process healthcare data goes hand in hand with ensuring the privacy of that data. Our information security and privacy information management systems are certified to ISO/IEC 27001, 27018, and 27701, and we have a SOC 2® Type 2 report on our controls relevant to security.

We operate in accordance with a set of security principles that apply broadly to software engineering and infrastructure DevOps:

- Development in secure environments

- Codebase and pull requests (PRs) are managed through Azure DevOps (ADO)

- Change management with approvals required at all stages

- Deployments progress through development (DEV), integration (INT), and production (PROD) rings

- Automated validation suites, scaled to the assessed risk and impact of proposed changes
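The ring and approval principles above can be sketched as a promotion gate. The ring names come from the list; the risk-to-suite mapping, the `Change` fields, and `promote` are hypothetical, and only illustrate how an approval and risk-scaled validation could both be required before a change advances one ring.

```python
from dataclasses import dataclass, field
from typing import Optional

RINGS = ["DEV", "INT", "PROD"]

# Hypothetical mapping from a change's rated risk to the validation suites it must pass.
REQUIRED_SUITES = {
    "low": {"unit"},
    "medium": {"unit", "integration"},
    "high": {"unit", "integration", "end_to_end"},
}

@dataclass
class Change:
    change_id: str
    risk: str                                        # "low", "medium" or "high"
    approved_for: set = field(default_factory=set)   # rings with a recorded approval
    passed_suites: set = field(default_factory=set)
    ring: Optional[str] = None                       # None until first deployed

def promote(change: Change) -> str:
    """Advance a change one ring, enforcing approval and risk-scaled validation."""
    if change.ring == RINGS[-1]:
        raise ValueError(f"{change.change_id} is already in {RINGS[-1]}")
    target = RINGS[0] if change.ring is None else RINGS[RINGS.index(change.ring) + 1]
    if target not in change.approved_for:
        raise PermissionError(f"{change.change_id}: no approval for {target}")
    missing = REQUIRED_SUITES[change.risk] - change.passed_suites
    if missing:
        raise RuntimeError(f"{change.change_id}: missing or failing suites {sorted(missing)}")
    change.ring = target
    return target

pr = Change("PR-1", "medium", approved_for={"DEV", "INT"}, passed_suites={"unit", "integration"})
print(promote(pr), promote(pr))  # DEV INT
```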

There are some specializations of these principles to AI development and operations, as discussed below.

Secure AI model development

Data

The data used to train, test, and validate models, including supervision from human experts, has controls for provenance and de-identification. We can always trace back which data was used to train which model. We also ensure appropriate licensing of any third-party reference data we use in AI development.
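A minimal sketch of dataset-to-model lineage, assuming each training dataset is identified by a content hash and tagged with its de-identification status and license. All names here (`DatasetVersion`, `LINEAGE`, `models_trained_on`) are hypothetical; the point is that a content hash makes it possible to answer which models were trained on a given dataset.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Dict, List

@dataclass(frozen=True)
class DatasetVersion:
    name: str
    content_hash: str   # SHA-256 of the exact rows used
    deidentified: bool  # recorded for audit; redaction models are trained on PHI in their own zone
    license_id: str     # e.g. "internal" or a third-party license identifier

def dataset_version(name: str, rows: list, deidentified: bool, license_id: str) -> DatasetVersion:
    digest = hashlib.sha256(json.dumps(rows, sort_keys=True).encode()).hexdigest()
    return DatasetVersion(name, digest, deidentified, license_id)

LINEAGE: Dict[str, List[DatasetVersion]] = {}  # model id -> datasets it was trained on

def register_training_run(model_id: str, datasets: List[DatasetVersion]) -> None:
    LINEAGE[model_id] = list(datasets)

def models_trained_on(content_hash: str) -> List[str]:
    return [m for m, ds in LINEAGE.items() if any(d.content_hash == content_hash for d in ds)]

labels = dataset_version("expert-labels-v3", [{"term": "DM2", "concept": "type 2 diabetes"}], True, "internal")
register_training_run("term-mapper-1.2", [labels])
print(models_trained_on(labels.content_hash))  # ['term-mapper-1.2']
```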

Libraries, frameworks, tools, and open-source models

The libraries (e.g., PyTorch), frameworks, and tools (e.g., Kubeflow, TensorBoard) that we use during model development are vetted and certified by our security team, and we track the lifecycle of these components so that we can respond to zero-day events as they occur. Similarly, any open-source models we use as base models for further fine-tuning (e.g., from Hugging Face) are checked for malicious content.
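As an illustration of one such check (not a description of Truveta's tooling): many model checkpoints are Python pickles, which can execute arbitrary code when loaded. The standard library's `pickletools.genops` lists a pickle's opcodes without loading it, so files that import or call objects can be flagged before anyone deserializes them. Real scanners compare the imported names against an allowlist, since legitimate framework checkpoints also use these opcodes; formats such as safetensors avoid the problem altogether.

```python
import pickle
import pickletools

# Opcodes that import a global or call/construct objects during unpickling.
RISKY_OPCODES = {"GLOBAL", "STACK_GLOBAL", "INST", "OBJ", "REDUCE", "NEWOBJ", "NEWOBJ_EX", "BUILD"}

def risky_opcodes(payload: bytes) -> set:
    """Statically list risky opcodes in a pickle without unpickling (executing) it."""
    return {op.name for op, _arg, _pos in pickletools.genops(payload)} & RISKY_OPCODES

class Payload:
    def __reduce__(self):
        # On load this would call print("pwned"); an attacker would call something like os.system.
        return (print, ("pwned",))

print(sorted(risky_opcodes(pickle.dumps({"weights": [0.1, 0.2]}))))  # []
print(sorted(risky_opcodes(pickle.dumps(Payload()))))
```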

Secure development zone

All our development work happens within well-defined security boundaries in the cloud. Access to these boundaries is governed by role-based access control (RBAC), combined with multi-factor authentication (MFA) and privileged access workstations (PAWs).
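A toy version of the access decision, assuming a session carries the user's roles plus flags for MFA and for whether the request comes from a PAW. The role names and permission strings are hypothetical; the sketch only shows that a matching role is not sufficient on its own, because the MFA and PAW checks must also pass.

```python
from dataclasses import dataclass, field
from typing import Set

# Hypothetical role-to-permission mapping for two security boundaries.
ROLE_PERMISSIONS = {
    "ml_engineer": {"dev_zone:read", "dev_zone:train"},
    "redaction_engineer": {"phi_redaction_zone:read", "phi_redaction_zone:train"},
}

@dataclass
class Session:
    user: str
    roles: Set[str] = field(default_factory=set)
    mfa_verified: bool = False
    on_paw: bool = False  # request originates from a privileged access workstation

def authorize(session: Session, permission: str) -> bool:
    """Allow only if MFA and PAW checks pass AND some role grants the permission."""
    if not (session.mfa_verified and session.on_paw):
        return False
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in session.roles)

alice = Session("alice", {"ml_engineer"}, mfa_verified=True, on_paw=True)
print(authorize(alice, "dev_zone:train"))           # True
print(authorize(alice, "phi_redaction_zone:read"))  # False: no role grants it
```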

Secure model hosting (ML Operations – MLOps)

Once models are trained in a secure development zone, they go through a certification step based on Data Quality Reports (DQRs), which I discussed at length in my previous blog post “Delivering accuracy and explainability for AI”. After models are certified for quality, they are hosted in production environments in various other secure zones, including health system embassies. MLOps performs this deployment following the standard software DevOps process described above. Deployed models are monitored with live metrics, and model drift is assessed by periodically rerunning DQRs on recent evaluation data.
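A minimal sketch of the periodic degradation check, assuming a DQR can be summarized as a dictionary of metrics where higher is better, and that a model is flagged when any metric falls more than an absolute tolerance below its certified baseline. The metric names and the 0.02 tolerance are illustrative assumptions.

```python
from typing import Dict

def drift_report(baseline: Dict[str, float], current: Dict[str, float],
                 tolerance: float = 0.02) -> Dict[str, float]:
    """Metrics (higher is better) that fell more than `tolerance` below the certified baseline.

    A metric missing from the rerun is treated as 0.0.
    """
    drops = {name: baseline[name] - current.get(name, 0.0) for name in baseline}
    return {name: round(drop, 4) for name, drop in drops.items() if drop > tolerance}

certified = {"precision": 0.95, "recall": 0.91}
rerun = {"precision": 0.94, "recall": 0.86}
print(drift_report(certified, rerun))  # {'recall': 0.05}
```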

To learn more about our commitment to data security, read our whitepaper on this topic.

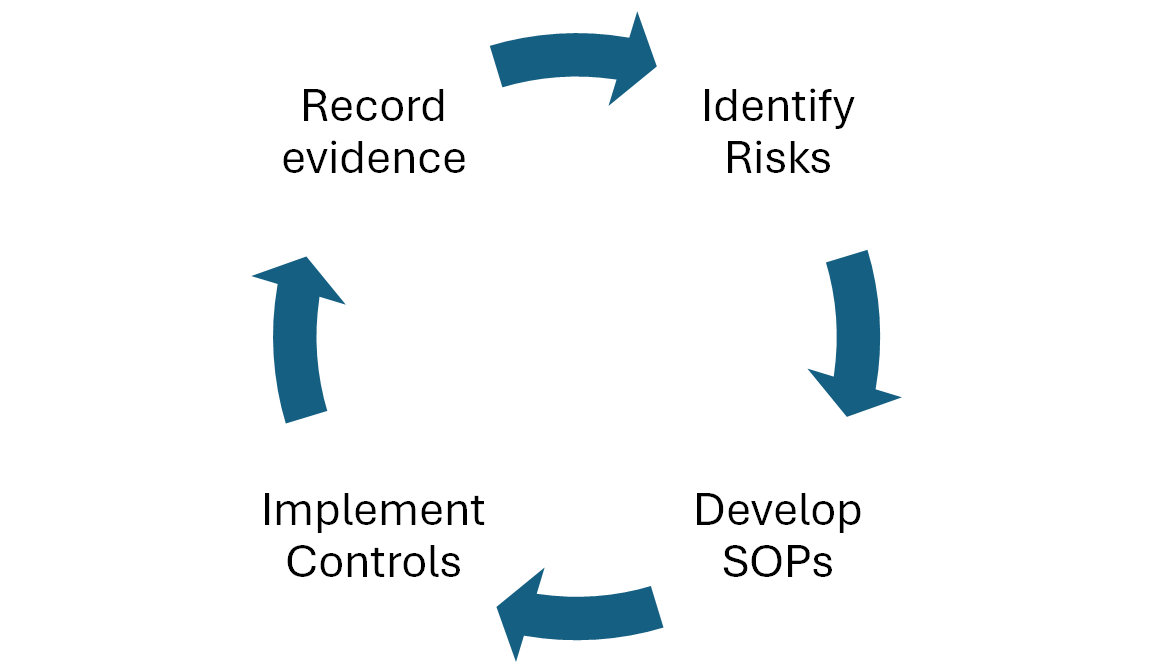

Regulatory-grade quality

The aim of regulatory-grade software development is to have an auditable (that is, verifiable and reproducible) process for proving that the data and platform tools our customers use for clinical studies have sufficient quality, along the dimensions of timeliness, completeness, cleanliness, and representativeness, for regulatory-grade submissions to the FDA and for publication in high-quality journals. The FDA recently published its final guidance on real-world evidence (RWE) and real-world data (RWD), and we adhere closely to it.

As with the other compliance needs, this applies to all engineering components, from data ingestion through to the research platform in Truveta Studio. We designed hundreds of product and process improvements to exceed FDA regulatory expectations, implemented process and procedure controls aligned with FDA guidance, and created standard operating procedures (SOPs). Continuous monitoring and evidence logging ensure that the system complies with these standards and that our customers can prove the integrity of the data and documentation included in their regulatory submissions. For AI specifically, we have SOPs for model development and hosting, controls in the form of DQRs and certifications, and evidence recorded in the Quality Management System (QMS). The models in production when a customer takes a data snapshot can be unambiguously identified, and their quality reports and certifications can be provided as evidence of regulatory-grade operation.
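To illustrate how the models in production at snapshot time could be identified, here is a sketch of a deployment registry with validity windows. The `Deployment` fields, including `dqr_id` as a pointer to certification evidence, are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional

@dataclass
class Deployment:
    model_id: str
    version: str
    dqr_id: str                          # hypothetical pointer to certification evidence in the QMS
    deployed_at: datetime
    retired_at: Optional[datetime] = None  # None while still in production

def models_in_production(deployments: List[Deployment], snapshot_time: datetime) -> List[Deployment]:
    """Deployments whose [deployed_at, retired_at) window contains the snapshot time."""
    return [
        d for d in deployments
        if d.deployed_at <= snapshot_time and (d.retired_at is None or snapshot_time < d.retired_at)
    ]

deployments = [
    Deployment("term-mapper", "1.1", "DQR-101", datetime(2024, 1, 10), datetime(2024, 3, 1)),
    Deployment("term-mapper", "1.2", "DQR-117", datetime(2024, 3, 1)),
    Deployment("redactor", "4.0", "DQR-090", datetime(2023, 11, 5)),
]
active = models_in_production(deployments, datetime(2024, 2, 15))
print([(d.model_id, d.version, d.dqr_id) for d in active])
# [('term-mapper', '1.1', 'DQR-101'), ('redactor', '4.0', 'DQR-090')]
```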

Truveta Data has been endorsed as research-ready for regulatory submissions. This reflects key areas of investment, including a state-of-the-art quality management system (QMS), third-party system audits by regulatory experts, and industry-leading security and privacy certifications.

Ethical AI

Ethical AI is the fourth pillar of the compliance matrix, and it is rapidly gaining prominence in the AI industry following the rise of generative AI. As AI technologies have become more powerful, it has become important that they are developed and applied ethically, in a manner that upholds common standards of decency, human rights, and responsibility to society at large. This area is still taking shape and is not yet fully regulated, and multiple organizations have developed recommendations (CHAI, RenAIssance, UNESCO Ethics of AI). These recommendations generally converge on the following main principles, with which Truveta is fully aligned.

- Proportionality and do-no-harm: For our use cases, this means the extraction and mapping of clinical terms should improve the usability of our data for research without adding false knowledge. We ensure this through rigorous quality and fit-for-purpose evaluations.

- Safety and security: Secure AI development and hosting, as described above.

- Fairness: The aim is to ensure the system does not introduce or reinforce systemic bias. To achieve this, we purposefully avoid using patients’ demographic features in the data harmonization models, and we use only redacted and de-identified data for model development.

- Privacy: We are compliant with HIPAA requirements as described above.

- Accountability, transparency, and explainability: As discussed in my previous blog post “Delivering accuracy and explainability for AI”, we follow a rigorous system of quality assurance and monitoring for our models. Wherever models have low confidence, they abstain rather than risk providing a wrong answer.

- Sustainability: As discussed in my previous blog post “Scaling AI models for growth”, we rely heavily on an agentic framework built from relatively small models that are more sustainable to train and host.

- Human oversight: In Truveta’s data pipeline, AI models often aid human operators (for example, by recommending mappings), and we always use human experts for ground truth and continuous error monitoring. Furthermore, human operators can always override AI actions. Hence there is continuous human oversight of the AI-driven data harmonization system.
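The abstention and human-oversight principles above can be sketched together, assuming a model returns a score per candidate label. The 0.9 threshold, the labels, and the review queue are hypothetical; the idea is that low-confidence items go to a human expert instead of receiving an automated answer.

```python
from typing import Dict, List, Optional

def predict_or_abstain(scores: Dict[str, float], threshold: float = 0.9) -> Optional[str]:
    """Return the top-scoring label if it clears the threshold, else None (abstain)."""
    label, score = max(scores.items(), key=lambda kv: kv[1])
    return label if score >= threshold else None

def route(item: str, scores: Dict[str, float], review_queue: List[str],
          threshold: float = 0.9) -> Optional[str]:
    label = predict_or_abstain(scores, threshold)
    if label is None:
        review_queue.append(item)  # a human expert provides the mapping instead
    return label

queue: List[str] = []
print(route("gluc fasting", {"fasting glucose": 0.97, "random glucose": 0.03}, queue))  # fasting glucose
print(route("A1c?", {"hemoglobin A1c": 0.55, "HbA1c ratio": 0.45}, queue))            # None
print(queue)  # ['A1c?']
```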

Concluding remarks

Compliance of AI is a multi-faceted undertaking involving assurance of privacy, security, regulatory-grade operations, and ethical use of AI technology. We have approached it systematically and conservatively to ensure we meet the high standard expected of us by our health system members, customers, and regulators.